
Table of Contents

- Why is AWS More Expensive?
- AWS Cost Optimization Best Practices
  - Perform Cost Modeling
  - Monitor Billing Console Dashboard
  - Create Schedules to Turn Off Unused Instances
  - Supply Resources Dynamically
  - Optimizing Your Cost with Rightsizing Recommendations
  - Utilize EC2 Spot Instances
  - Optimizing EC2 Auto Scaling Groups (ASG) Configuration
  - Compute Savings Plans
  - Delete Idle Load Balancers
  - Identify and Delete Orphaned EBS Snapshots
  - Handling AWS Chargebacks for Enterprise Customers
- AWS Tools for Cost Optimization
- Conclusion

Key Takeaways

- AWS is a widely used cloud platform that offers more than 200 services. Because these cloud resources are dynamic, their cost is difficult to manage.

- Tools such as the AWS Billing Console, AWS Trusted Advisor, Amazon CloudWatch, Amazon S3 Analytics, and AWS Cost Explorer can help with cost optimization.

- AWS also offers flexible purchase options for each workload, which helps you improve resource utilization.

- With Instance Scheduler, you can stop paying for resources during non-operating hours.

- Modernize your cloud architecture by scaling microservices architectures with serverless products such as AWS Lambda.

In today’s tech world, where automation and the cloud dominate the market, most software development companies use modern platforms such as AWS to deliver the best services to their clients and to build robust in-house solutions.

To stay ahead of the competition and deliver business value at lower cost, these companies need cost optimization. In this post, we explain what cost optimization is, why it is essential on AWS, and which AWS cost optimization best practices organizations should consider.

1. Why is AWS More Expensive?

AWS is a cloud platform widely used by software development companies, offering more than 200 services. Because these cloud resources are dynamic, their cost is difficult to manage and predict. Beyond that, here are some of the main reasons AWS can become expensive for an organization:

- Unused Elastic Block Store (EBS) volumes, load balancers, snapshots, and other resources keep incurring charges whether or not you use them.

- Some businesses pay for compute services such as Amazon EC2 but do not utilize the instances properly.

- Reserved Instances or Spot Instances, which generally offer discounts of 50-90% compared to On-Demand pricing, are not used where they would fit.

- Auto Scaling is sometimes implemented poorly or is not tuned to the business. For example, when demand increases and you scale out to meet it, you may end up with too many redundant resources, which also costs a lot.

- AWS Savings Plans are not used properly, so they do not reduce total AWS spend as much as they could.

2. AWS Cost Optimization Best Practices

Here are some AWS cost optimization best practices that any organization using AWS can follow.

2.1 Perform Cost Modeling

One of the top practices for AWS cost optimization is cost modeling your workload. First understand each component of the workload clearly, then model its cost to balance resources and find the correct size for each resource that still delivers the required level of performance.

A proper understanding of cost considerations also helps companies strengthen their business case and make decisions based on the value each option is expected to deliver.
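To make cost modeling concrete, here is a minimal sketch in Python that compares candidate instance sizes for one workload component and picks the cheapest configuration that still meets a performance target. The option names, hourly prices, and per-instance throughput figures are hypothetical placeholders, not AWS prices; in practice they would come from the AWS Pricing Calculator and your own load tests. The 730 hours per month is a common approximation.

```python
import math
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation of the hours in one month


@dataclass(frozen=True)
class Option:
    """One candidate size for a workload component (all figures hypothetical)."""
    name: str
    hourly_price: float  # assumed price per instance-hour, in USD
    capacity_rps: float  # requests per second one instance can sustain


def cheapest_option(options, required_rps):
    """Return (option, instance_count, monthly_cost) for the cheapest option meeting required_rps."""
    best = None
    for opt in options:
        # Smallest number of instances that still meets the performance target.
        count = max(1, math.ceil(required_rps / opt.capacity_rps))
        monthly_cost = count * opt.hourly_price * HOURS_PER_MONTH
        if best is None or monthly_cost < best[2]:
            best = (opt, count, monthly_cost)
    return best


# Hypothetical example: compare two sizes for a component that must serve 800 requests/s.
opt, count, monthly = cheapest_option(
    [Option("large", 0.20, 500), Option("medium", 0.09, 200)], required_rps=800
)
print(opt.name, count, round(monthly, 2))
```

With these made-up numbers, four medium instances cost less per month than two large ones while meeting the same target, which is the kind of trade-off cost modeling is meant to surface.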

You can use AWS services, as well as custom logs, as data sources that describe how your services and workload components operate. For example:

- AWS Trusted Advisor

- Amazon CloudWatch

This is how AWS Trusted Advisor works:

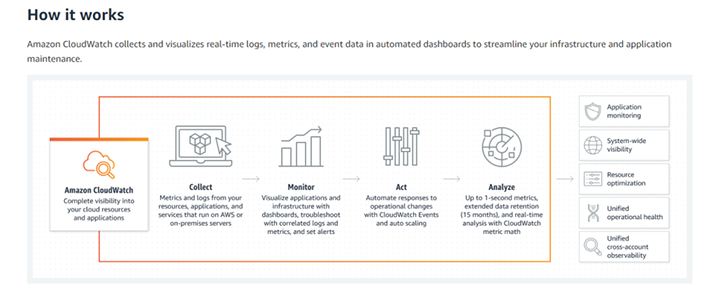

Now, let’s look at Amazon CloudWatch. These are some recommended practices for keeping its cost under control:

- Each metric evaluated by a CloudWatch alarm incurs a charge, so remove unnecessary alarms.

- Delete dashboards that are not necessary. Ideally, keep three or fewer, which is the number of dashboards the CloudWatch free tier includes.

- Review your Contributor Insights reports and remove any rules that are not required.

- Evaluate logging levels and eliminate unnecessary logs to reduce ingestion costs.

- Turn off monitoring of custom metrics when it is not needed, to avoid unnecessary charges.
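One way to act on the advice about unnecessary alarms is to look for alarms that have sat in the INSUFFICIENT_DATA state for a long time, which often means the metric they watch no longer receives data. The sketch below assumes you saved the JSON output of aws cloudwatch describe-alarms (its MetricAlarms, AlarmName, StateValue, and StateUpdatedTimestamp fields) and treats 30 days as the cutoff; both the heuristic and the threshold are assumptions to adapt, and any flagged alarm should be reviewed before deletion.

```python
from datetime import datetime, timedelta, timezone


def parse_ts(value):
    """Parse an ISO 8601 timestamp such as '2024-01-01T00:00:00.000Z'."""
    # fromisoformat() accepts a trailing 'Z' only from Python 3.11, so normalize it.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def stale_alarms(describe_alarms_output, now, min_days=30):
    """Return names of alarms stuck in INSUFFICIENT_DATA for at least min_days."""
    cutoff = now - timedelta(days=min_days)
    return [
        alarm["AlarmName"]
        for alarm in describe_alarms_output.get("MetricAlarms", [])
        if alarm["StateValue"] == "INSUFFICIENT_DATA"
        and parse_ts(alarm["StateUpdatedTimestamp"]) <= cutoff
    ]


# Hypothetical sample shaped like describe-alarms output.
sample = {
    "MetricAlarms": [
        {"AlarmName": "old-queue-depth", "StateValue": "INSUFFICIENT_DATA",
         "StateUpdatedTimestamp": "2024-01-01T00:00:00.000Z"},
        {"AlarmName": "api-errors", "StateValue": "OK",
         "StateUpdatedTimestamp": "2024-01-01T00:00:00.000Z"},
        {"AlarmName": "new-service", "StateValue": "INSUFFICIENT_DATA",
         "StateUpdatedTimestamp": "2024-05-25T00:00:00.000Z"},
    ]
}
print(stale_alarms(sample, now=datetime(2024, 6, 1, tzinfo=timezone.utc)))
```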

2.2 Monitor Billing Console Dashboard

The AWS Billing console dashboard lets organizations check their month-to-date AWS spending, pinpoint the most expensive services, and understand their overall level of cost. Users can get a precise picture of cost and usage easily from this dashboard. The Dashboard page includes sections such as:

- AWS Summary: An overview of AWS costs across all accounts, services, and AWS Regions.

- Cost Trend by Top Five Services: The cost trend of your five most expensive services over the most recent billing periods.

- Highest Cost and Usage Details: The top services, AWS Regions, and accounts by cost and usage.

- Account Cost Trend: The trend of account costs over the most recent closed billing periods.

In the Billing console, one of the most commonly viewed pages is the Bills page, where users can view month-to-date costs and a detailed breakdown of services by Region. From this page, users can also review their cost and usage history, including AWS invoices.

Organizations can also access other payment-related information and configure billing preferences. Based on the dashboard statistics, they can monitor the various services and take action to optimize cost.
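A "top services" view like the dashboard's boils down to grouping costs by service and ranking them. The sketch below reproduces that idea over a hypothetical list of line items with service and cost keys; it is not how the console computes its figures, and real data would come from a Cost and Usage Report or a Cost Explorer export.

```python
from collections import defaultdict


def top_services(line_items, n=5):
    """Sum cost per service and return the n most expensive as (service, total) pairs."""
    totals = defaultdict(float)
    for item in line_items:
        totals[item["service"]] += item["cost"]
    # Highest total first, as in a "top services" view.
    return sorted(totals.items(), key=lambda pair: pair[1], reverse=True)[:n]


# Hypothetical month-to-date line items.
items = [
    {"service": "Amazon EC2", "cost": 100.0},
    {"service": "Amazon RDS", "cost": 80.0},
    {"service": "Amazon EC2", "cost": 50.0},
    {"service": "Amazon S3", "cost": 30.0},
    {"service": "AWS Lambda", "cost": 5.0},
]
print(top_services(items, n=2))
```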

2.3 Create Schedules to Turn Off Unused Instances

Another AWS cost optimization best practice is to create schedules that turn off instances that are not used on a regular basis. Consider the following:

- Shut down unused AWS instances at the end of every working day, over weekends, and during vacations.

- Evaluate the instances' usage metrics to see when they are actually used, so you can build an accurate schedule that always stops them when they are not in use.

- Optimizing non-production instances is essential; plan their on and off hours in advance.

- Check whether you are still paying for EBS volumes and other related resources while the instances are stopped, and find a solution for it.
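The scheduling rules above reduce to a simple check: should this instance be running right now? The sketch below encodes an office-hours schedule (Monday to Friday, 08:00 to 18:00, minus listed holidays). The hours, workdays, and holiday list are assumptions, and the code only makes the decision; on AWS, the Instance Scheduler solution or a similar automation would actually start and stop the instances.

```python
from datetime import date, datetime


def should_run(now, start_hour=8, stop_hour=18, workdays=(0, 1, 2, 3, 4), holidays=frozenset()):
    """Return True if an instance should be running at `now` under an office-hours schedule.

    datetime.weekday() returns 0 for Monday, so the default workdays are Monday to Friday.
    """
    if now.date() in holidays:
        return False  # vacations and public holidays: keep the instance stopped
    return now.weekday() in workdays and start_hour <= now.hour < stop_hour


def weekly_running_hours(start_hour=8, stop_hour=18, workdays=(0, 1, 2, 3, 4)):
    """Hours per week the instance runs under the schedule (holidays ignored)."""
    return len(workdays) * (stop_hour - start_hour)


print(should_run(datetime(2024, 6, 3, 9, 0)))   # Monday morning
print(should_run(datetime(2024, 6, 1, 10, 0)))  # Saturday
print(weekly_running_hours(), "of 168 hours")
```

With the default schedule the instance runs 50 of the 168 hours in a week, so it is stopped roughly 70% of the time. Attached EBS volumes are still billed while it is stopped.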

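CloudWatch alarms can also act on EC2 instances directly. Let’s look at the different alarm action scenarios:

| Scenario | Description |
|---|---|
| Add Stop Actions to AWS CloudWatch Alarms | Stop an EC2 instance when a threshold is met, for example when average CPU utilization has stayed below 10% for 24 hours, which signals an idle development or test instance. |
| Add Terminate Actions to AWS CloudWatch Alarms | Terminate an EC2 instance automatically when a threshold is met, for example when it has finished its work and is no longer needed (termination protection must not be enabled). |
| Add Reboot Actions to AWS CloudWatch Alarms | Reboot an EC2 instance automatically; recommended for instance status check failures. |
| Add Recover Actions to AWS CloudWatch Alarms | Recover an EC2 instance automatically; recommended for system status check failures caused by problems with the underlying hardware. |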
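A stop action is typically tied to an idle condition, for example average CPU utilization below 10% for 24 hours. The sketch below evaluates that condition over hourly CPU averages you have already fetched; the threshold and window are illustrative. In CloudWatch itself you would express the same rule with aws cloudwatch put-metric-alarm and an alarm action such as arn:aws:automate:<region>:ec2:stop, rather than with your own code.

```python
def is_idle(hourly_cpu_averages, threshold=10.0, window_hours=24):
    """Return True if mean CPU over the last `window_hours` hourly averages is below `threshold`.

    With fewer data points than the window, return False: a real alarm would report
    INSUFFICIENT_DATA instead of firing.
    """
    if len(hourly_cpu_averages) < window_hours:
        return False
    recent = hourly_cpu_averages[-window_hours:]
    return sum(recent) / window_hours < threshold


print(is_idle([3.0] * 24))   # idle dev/test instance
print(is_idle([20.0] * 24))  # busy instance
```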

2.4 Supply Resources Dynamically

In the cloud, an organization pays for what it uses, so it should supply resources that match workload demand at the time it is needed. This reduces the cost of overprovisioning. To achieve this, the organization can modify demand by using a buffer, throttle, or queue to smooth it out, so that the workload can be served with fewer resources.

This approach favors just-in-time supply, balanced against the need for high availability, tolerance of resource failures, and provisioning time. Whether demand is fixed or variable, plan to develop metrics and automation so that ongoing management effort is minimal. On AWS, supplying resources dynamically is considered a best practice for reducing cost.

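| Practice | Implementation Steps |
|---|---|
| Schedule Scaling Configuration | Create scheduled actions that change the group's minimum, maximum, or desired capacity at specific times, for predictable load changes such as business hours. |
| Predictive Scaling Configuration | Use predictive scaling to forecast capacity needs from historical load and launch instances ahead of recurring, cyclical demand. |
| Configuration of Dynamic Automatic Scaling | Use target tracking or step scaling policies that add or remove instances in response to metrics such as average CPU utilization. |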
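The buffering idea from this section can be seen in a tiny simulation: work arrives in bursts, a queue absorbs it, and a fixed-size fleet drains it. The sketch below uses made-up arrival numbers and a capacity expressed in work items per time step; it ignores the latency targets and message retention limits that a real Amazon SQS-based design would need to respect.

```python
def drain_queue(arrivals, capacity_per_step):
    """Simulate a queue in front of a fixed-capacity fleet.

    Returns (backlog after each step, largest backlog seen).
    """
    backlog = 0
    history = []
    for arriving in arrivals:
        # New work joins the queue; the fleet processes up to its capacity.
        backlog = max(0, backlog + arriving - capacity_per_step)
        history.append(backlog)
    return history, max(history, default=0)


history, peak_backlog = drain_queue([10, 0, 10, 0], capacity_per_step=5)
print(history, peak_backlog)
```

Peak demand is 10 items per step, yet a fleet sized for 5 clears every burst one step later. The queue trades a little latency for half the capacity.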

2.5 Optimizing Your Cost with Rightsizing Recommendations

Rightsizing recommendations are another AWS cost optimization best practice. This Cost Explorer feature helps companies identify cost-saving opportunities by removing or downsizing Amazon EC2 (Elastic Compute Cloud) instances.

Rightsizing recommendations analyze your Amazon EC2 resources and their usage to find opportunities to lower spending. You can view underutilized Amazon EC2 instances across member accounts in a single view, see how much you could save, and then take action.
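Cost Explorer produces rightsizing recommendations from its own analysis of utilization data; the sketch below is only a simplified stand-in that shows the shape of the calculation. It assumes you already have, per instance, the peak CPU utilization over the lookback period, a candidate smaller type, and hourly prices for both. The 40% peak-CPU threshold, the field names, the instance IDs, and the prices are hypothetical.

```python
HOURS_PER_MONTH = 730  # common approximation of the hours in one month


def rightsizing_candidates(instances, max_cpu_threshold=40.0):
    """Return (instance_id, suggested_type, estimated_monthly_saving) for underutilized instances."""
    candidates = []
    for inst in instances:
        smaller = inst.get("smaller_type")
        if smaller and inst["max_cpu"] < max_cpu_threshold:
            saving = (inst["hourly_price"] - inst["smaller_hourly_price"]) * HOURS_PER_MONTH
            candidates.append((inst["id"], smaller, round(saving, 2)))
    return candidates


# Hypothetical utilization and price data.
fleet = [
    {"id": "i-example1", "max_cpu": 12.0, "hourly_price": 0.20,
     "smaller_type": "m5.large", "smaller_hourly_price": 0.10},
    {"id": "i-example2", "max_cpu": 85.0, "hourly_price": 0.20,
     "smaller_type": "m5.large", "smaller_hourly_price": 0.10},
]
print(rightsizing_candidates(fleet))
```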

2.6 Utilize EC2 Spot Instances

Utilizing Amazon EC2 Spot Instances is one of the AWS cost optimization best practices every business should follow. Spot Instances let companies take advantage of unused EC2 capacity in the AWS cloud.

Spot Instances are available at up to a 90% discount compared to On-Demand prices. They can be used for various stateless, flexible, or fault-tolerant applications such as CI/CD, big data, web servers, containerized workloads, high-performance computing (HPC), and more.

Because Spot Instances are closely integrated with AWS services such as EMR, Auto Scaling, AWS Batch, ECS, Data Pipeline, and CloudFormation, companies can choose how they launch and maintain applications running on Spot Instances. Take the following aspects into consideration:

- Massive scale: Spot Instances offer major advantages when operating at massive scale on AWS. They let you run hyperscale workloads at a lower cost, or accelerate workloads by running many tasks in parallel.

- Low, predictable prices: Spot Instances can be purchased at up to 90% off On-Demand prices. Using EC2 Auto Scaling, companies can provision capacity across Spot Instances, Reserved Instances (RIs), and On-Demand Instances to optimize workload cost.

- Easy to use: Launching, scaling, and managing Spot Instances is easy with AWS services such as ECS and EC2 Auto Scaling.
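To see why mixing purchase options pays off, the sketch below estimates the hourly cost of a fleet that keeps an On-Demand base and splits the remaining capacity between On-Demand and Spot. The parameters follow the idea behind the OnDemandBaseCapacity and OnDemandPercentageAboveBaseCapacity settings of an Auto Scaling group's mixed instances policy, but the rounding rule and the prices are assumptions, not AWS's exact behavior or current rates.

```python
def fleet_hourly_cost(total, on_demand_base, on_demand_pct_above_base, on_demand_price, spot_price):
    """Return (on_demand_count, spot_count, hourly_cost) for a mixed On-Demand/Spot fleet.

    on_demand_pct_above_base is an integer percentage (0-100) of the capacity above the base.
    """
    base = min(on_demand_base, total)
    remaining = total - base
    # Assumption: the On-Demand share above the base is rounded up to whole instances.
    on_demand_above = (remaining * on_demand_pct_above_base + 99) // 100
    on_demand = base + on_demand_above
    spot = total - on_demand
    return on_demand, spot, on_demand * on_demand_price + spot * spot_price


od, spot, cost = fleet_hourly_cost(10, on_demand_base=2, on_demand_pct_above_base=25,
                                   on_demand_price=0.10, spot_price=0.03)
print(od, spot, round(cost, 2))
```

With these hypothetical prices, the mixed fleet of 4 On-Demand and 6 Spot Instances costs about 0.58 per hour, against 1.00 for 10 On-Demand Instances.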

2.7 Optimizing EC2 Auto Scaling Groups (ASG) Configuration

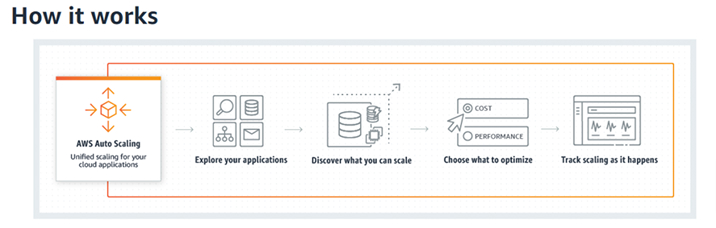

Another AWS cost optimization best practice is to configure EC2 Auto Scaling groups (ASGs) well. An ASG is a collection of Amazon EC2 instances that is treated as a logical grouping for automatic scaling and management. ASGs can use Amazon EC2 Auto Scaling features such as health checks and custom scaling policies based on your application's metrics.

An ASG dynamically adds or removes EC2 instances according to predefined rules applied to the load, so the EC2 fleet scales with demand and you avoid paying for idle capacity. You can view all scaling activities in the Amazon EC2 Auto Scaling console or with the describe-scaling-activities CLI command. To optimize scaling policies and reduce the cost of scaling out and in, consider the following:

- When scaling out, add instances less aggressively, so you can monitor the application and see whether anything is affected.

- When scaling in, remove instances down to the minimum needed to maintain the current application load.
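The scale-out and scale-in advice can be expressed as a small capacity rule: grow gradually, but shrink straight to what the current load needs. The sketch below assumes you know the current load and roughly how much load one instance handles; the step size, minimum size, and units are hypothetical. In a real Auto Scaling group you would express this with step scaling or target tracking policies, cooldowns, and the group's own minimum and maximum sizes rather than custom code.

```python
import math


def next_capacity(current, load, per_instance_capacity, max_step_out=2, min_size=1):
    """Return the next desired instance count for the group."""
    required = max(min_size, math.ceil(load / per_instance_capacity))
    if required > current:
        # Scale out less aggressively: add at most max_step_out instances per evaluation,
        # giving you time to watch how the application responds.
        return min(required, current + max_step_out)
    # Scale in to the minimum needed for the current load.
    return required


print(next_capacity(4, load=900, per_instance_capacity=100))  # needs 9, grows to 6 first
print(next_capacity(6, load=250, per_instance_capacity=100))  # shrinks to 3
```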

This is how AWS auto scaling works:

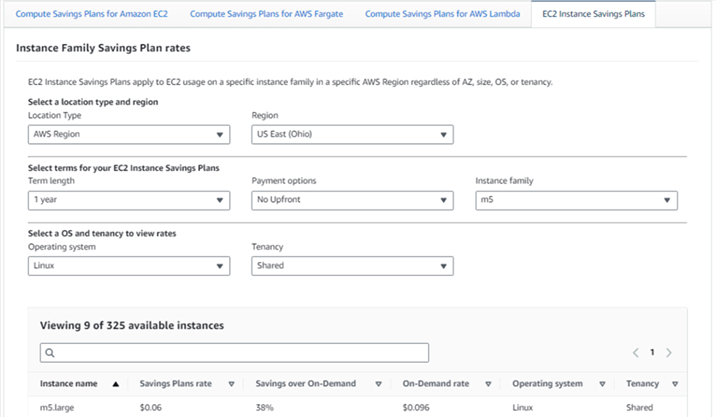

2.8 Compute Savings Plans

Compute Savings Plans are a very effective way to optimize cost on AWS. They offer the most flexibility of the Savings Plans options and can reduce costs by up to 66%. Compute Savings Plans apply automatically to EC2 instance usage regardless of instance family, size, OS, or Region. For example, you can change instances from C4 to M5, or move a workload from EC2 to Lambda or Fargate, and still benefit from Compute Savings Plans.

Figure: Computation of AWS Savings Plans rates
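The basic arithmetic behind a Savings Plan is that you commit to a fixed amount of spend per hour; usage up to that commitment is billed at the discounted Savings Plans rate, and anything beyond it is billed at On-Demand rates. The sketch below computes one hour's bill under the simplifying assumption of a single uniform discount rate (below 100%); real Savings Plans rates differ by instance family, Region, and service.

```python
def hourly_bill(on_demand_equivalent, commitment, discount):
    """Cost of one hour of usage under a Savings Plan.

    on_demand_equivalent: what the hour's usage would cost at On-Demand rates.
    commitment: the committed spend per hour.
    discount: assumed uniform Savings Plans discount, e.g. 0.5 for 50%.
    """
    usage_at_sp_rates = on_demand_equivalent * (1 - discount)
    if usage_at_sp_rates <= commitment:
        # All usage is covered; the full commitment is still billed even if some goes unused.
        return commitment
    # The commitment covers this much On-Demand-equivalent usage...
    covered_on_demand = commitment / (1 - discount)
    # ...and the rest is billed at On-Demand rates.
    return commitment + (on_demand_equivalent - covered_on_demand)


print(hourly_bill(10.0, commitment=3.0, discount=0.5))  # partly covered
print(hourly_bill(10.0, commitment=6.0, discount=0.5))  # over-committed
```

In the first call, the 3.00 commitment covers 6.00 of On-Demand-equivalent usage and the remaining 4.00 is billed On-Demand, for 7.00 instead of 10.00. In the second, usage worth 5.00 at Savings Plans rates is covered, but the full 6.00 commitment is still billed, which is why commitments are best sized from steady baseline usage.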

2.9 Delete Idle Load Balancers

Another AWS cost optimization best practice is to delete idle load balancers. Every load balancer in your account incurs cost, and one that has no backend instances or network traffic is not doing any useful work, so it only adds to your bill. Start by reviewing your Elastic Load Balancing configuration to find load balancers that are not in use. AWS Trusted Advisor can help here: its idle load balancer check flags load balancers that have no active or healthy backend instances, or that have had fewer than 100 requests per day over the last 7 days. Once you have confirmed that such a load balancer is not needed, delete it to reduce cost.
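The idle check can be sketched as a filter over per-load-balancer data you have collected yourself, for example daily RequestCount sums from CloudWatch and a count of healthy targets. The criteria are modeled on the Trusted Advisor check (no healthy backends, or fewer than 100 requests per day for each of the last 7 days), but the record layout and names are hypothetical, and the result is a list of candidates to review, not something to delete automatically.

```python
def idle_load_balancers(balancers, min_daily_requests=100, days=7):
    """Return names of load balancers that look idle and deserve a review."""
    idle = []
    for lb in balancers:
        recent = lb["daily_requests"][-days:]
        no_healthy_backends = lb["healthy_backends"] == 0
        # Require a full window of history before calling traffic "low".
        low_traffic = len(recent) == days and all(r < min_daily_requests for r in recent)
        if no_healthy_backends or low_traffic:
            idle.append(lb["name"])
    return idle


# Hypothetical inventory.
balancers = [
    {"name": "legacy-web", "healthy_backends": 2, "daily_requests": [5] * 7},
    {"name": "orphan-lb", "healthy_backends": 0, "daily_requests": [1000] * 7},
    {"name": "checkout", "healthy_backends": 3, "daily_requests": [500] * 7},
    {"name": "brand-new", "healthy_backends": 2, "daily_requests": [5] * 3},
]
print(idle_load_balancers(balancers))
```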

2.10 Identify and Delete Orphaned EBS Snapshots

Another AWS cost optimization best practice is to identify and remove orphaned EBS snapshots. The following steps show how the AWS CLI lets you search for specific snapshots that can be deleted, and then delete them.

- Start with the describe-snapshots command. It lists the snapshots available to you, which include public snapshots, private snapshots you own, and private snapshots owned by other AWS accounts that have granted you create volume permissions. Add --owner-ids self to limit the results to your own snapshots, then add a JMESPath expression with --query to filter them by creation time. The command below lists the IDs of snapshots created on or before 2022-06-01.

```shell
aws ec2 describe-snapshots --owner-ids self --query "Snapshots[?(StartTime<='2022-06-01')].[SnapshotId]" --output text
```

- To find old snapshots for a specific group, add a tag filter to the command. In the example below, snapshots carry a tag named “Team”, and the command returns snapshots owned by the “Infra” team that were created on or before 2020-03-31.

```shell
aws ec2 describe-snapshots --owner-ids self --filters Name=tag:Team,Values=Infra --query "Snapshots[?(StartTime<='2020-03-31')].[SnapshotId]" --output text
```

- Once you have the list of snapshots associated with that tag, delete each one with the delete-snapshot command, replacing <value> with a snapshot ID:

```shell
aws ec2 delete-snapshot --snapshot-id <value>
```

Snapshots are incremental. If you delete a snapshot that contains data referenced by another snapshot, that data is not deleted; it is kept for the snapshot that still references it. This means deleting a snapshot may not reduce storage as much as expected, because only the blocks that no other snapshot uses are freed.
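Filtering by age and tag is one approach; an orphaned snapshot is more specifically one whose source volume no longer exists. The sketch below cross-references saved JSON output from aws ec2 describe-snapshots --owner-ids self and aws ec2 describe-volumes (using their Snapshots, Volumes, SnapshotId, VolumeId, and StartTime fields) and returns snapshots of deleted volumes older than a cutoff. The sample IDs are made up. Treat the result as candidates: a snapshot that backs a registered AMI cannot be deleted until the AMI is deregistered, and some old snapshots may be deliberate backups.

```python
from datetime import datetime, timezone


def parse_ts(value):
    """Parse an ISO 8601 timestamp such as '2020-01-01T00:00:00.000Z'."""
    # fromisoformat() accepts a trailing 'Z' only from Python 3.11, so normalize it.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def orphaned_snapshots(snapshots_output, volumes_output, cutoff):
    """Return IDs of snapshots whose source volume is gone and that started on or before cutoff."""
    live_volumes = {vol["VolumeId"] for vol in volumes_output.get("Volumes", [])}
    return [
        snap["SnapshotId"]
        for snap in snapshots_output.get("Snapshots", [])
        if snap.get("VolumeId") not in live_volumes
        and parse_ts(snap["StartTime"]) <= cutoff
    ]


snapshots = {"Snapshots": [
    {"SnapshotId": "snap-old-orphan", "VolumeId": "vol-deleted", "StartTime": "2020-01-01T00:00:00.000Z"},
    {"SnapshotId": "snap-old-live", "VolumeId": "vol-live", "StartTime": "2020-01-01T00:00:00.000Z"},
    {"SnapshotId": "snap-new-orphan", "VolumeId": "vol-deleted", "StartTime": "2024-05-01T00:00:00.000Z"},
]}
volumes = {"Volumes": [{"VolumeId": "vol-live"}]}
print(orphaned_snapshots(snapshots, volumes, cutoff=datetime(2022, 6, 1, tzinfo=timezone.utc)))
```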

2.11 Handling AWS Chargebacks for Enterprise Customers

The last practice on our list is handling chargebacks for enterprise customers. As AWS product portfolios and features grow, enterprise customers increasingly migrate existing workloads to new AWS products, and keeping cloud charges low in this situation is very difficult. The complexity grows further when your business's resources and services are not tagged correctly. To help businesses normalize their processes and reduce costs as AWS keeps changing, it is necessary to implement automated billing and chargebacks transparently. Take the following steps into consideration:

- First, develop a proper understanding of blended and unblended costs in the consolidated billing files (the Cost & Usage Report and the Detailed Billing Report).

- Then use the AWS Account Vending Machine to create AWS accounts, and store the account details and reservation-related data in separate tables in a database.

- Next, provide a web page, hosted on AWS Lambda or a web server, where the admin can add invoice details.

- Finally, to start the billing transformation process, add a trigger to the S3 bucket that pushes messages into Amazon Simple Queue Service (SQS). The billing transformation then runs on Amazon EC2 instances.
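At the core of a chargeback is allocating each cost line item to an owner by tag while keeping untagged spend visible. The sketch below assumes rows from a Cost and Usage Report CSV (for example read with csv.DictReader), a user-defined Team cost allocation tag, and the legacy CUR column names resourceTags/user:Team and lineItem/UnblendedCost; check the column names in your own report before relying on them. It allocates unblended cost; whether to charge back blended or unblended cost is a policy choice.

```python
from collections import defaultdict

TEAM_COLUMN = "resourceTags/user:Team"  # assumed user-defined cost allocation tag column
COST_COLUMN = "lineItem/UnblendedCost"  # assumed CUR cost column (values are strings in CSV)


def chargeback(rows):
    """Sum unblended cost per team; rows without a Team tag go to 'untagged'."""
    totals = defaultdict(float)
    for row in rows:
        team = row.get(TEAM_COLUMN) or "untagged"
        totals[team] += float(row[COST_COLUMN])
    return dict(totals)


# Hypothetical rows, shaped like csv.DictReader output.
rows = [
    {TEAM_COLUMN: "Infra", COST_COLUMN: "10.5"},
    {TEAM_COLUMN: "Data", COST_COLUMN: "4.5"},
    {TEAM_COLUMN: "", COST_COLUMN: "2.0"},
    {COST_COLUMN: "1.0"},
    {TEAM_COLUMN: "Infra", COST_COLUMN: "0.5"},
]
print(chargeback(rows))
```

A large untagged bucket is a sign that tagging needs attention, which is exactly where chargeback complexity comes from.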

3. AWS Tools for Cost Optimization

Now that we have gone through the practices for AWS cost optimization, let’s look at the tools that help companies track, report on, and analyze costs through various AWS reports.

- Amazon S3 Analytics: Automatically analyzes and visualizes Amazon S3 storage access patterns, helping you decide whether to move data to a different storage class.

- AWS Cost Explorer: Lets you view patterns in your AWS spending, review current spending, project future costs, observe Reserved Instance utilization and coverage, and more.

- AWS Budgets: Lets companies set custom budgets that trigger alerts when costs exceed the budgeted amount.

- AWS Trusted Advisor: Inspects your AWS environment in real time and identifies areas that can be optimized.

- AWS CloudTrail: Logs, continuously monitors, and retains account activity across your AWS infrastructure, giving you the visibility to take better actions, including ones that reduce cost.

- Amazon CloudWatch: Lets companies collect and track metrics, set alarms, monitor log files, and automatically react to changes in AWS resources.
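AWS Budgets can alert on actual spend and on forecasted spend. The sketch below shows the idea behind such alerts with a naive straight-line forecast (month-to-date spend extrapolated to the end of the month) and alert thresholds at 80% and 100% of the budget; both the forecasting method and the thresholds are assumptions, since AWS Budgets uses its own forecasting.

```python
def budget_alerts(budget, month_to_date, day_of_month, days_in_month, thresholds=(80, 100)):
    """Return (kind, threshold_pct) alerts for actual or straight-line forecasted spend."""
    forecast = month_to_date / day_of_month * days_in_month
    alerts = []
    for pct in thresholds:
        limit = budget * pct / 100
        if month_to_date >= limit:
            alerts.append(("actual", pct))
        elif forecast >= limit:
            alerts.append(("forecasted", pct))
    return alerts


# Day 10 of a 30-day month with 400 spent: on track for 1,200 against a 1,000 budget.
print(budget_alerts(1000, 400, day_of_month=10, days_in_month=30))
```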

4. Conclusion

As this blog shows, organizations that use the AWS platform to build modern, scalable applications can follow many different AWS cost optimization best practices. Organizations that follow them can achieve their desired results with AWS without hassle and stay ahead in the competitive world of technology providers.

Mohit Savaliya

Mohit Savaliya looks after operations at TatvaSoft and leverages his technical background to understand microservices architecture. He showcases his technical expertise through bylines, collaborates with development teams, and brings out the best trending topics in Cloud and DevOps.
