In today’s AI-driven digital landscape, building software that merely works is no longer enough. As user bases grow, data volumes increase, and feature sets expand, scalability must be a primary concern when designing software architecture. A system must be able to handle increased workloads while maintaining good performance. Scalability plays a crucial role in ensuring long-term success and sustainability. Whether you’re developing a startup MVP or managing enterprise-grade software, overlooking scalability can cause performance bottlenecks, downtime, and increased technical debt. For any software development company, scalability is no longer just a technical requirement but a business enabler for clients.

This blog dives deep into what software scalability means, its importance, the different types of software scalability, the common challenges teams encounter, and best practices to achieve scalability. Whether you’re a developer, architect, or product manager, understanding scalability in software is key to delivering robust, future-ready, and scalable software solutions. Let’s explore how to make software scalability a foundational pillar of your projects.

1. What is Software Scalability?

Software scalability is a system’s ability to handle increased workloads, user demands, or data volumes without compromising its performance, reliability, or maintainability. It’s about future-proofing software systems and applications to adapt seamlessly to change, minimizing the need for major redesigns, and maintaining a high-quality user experience under varying conditions.

1.1 Types of Software Scalability

Software scalability may take different forms based on the affected part of the system. Here are the two most common software scalability types you must know.

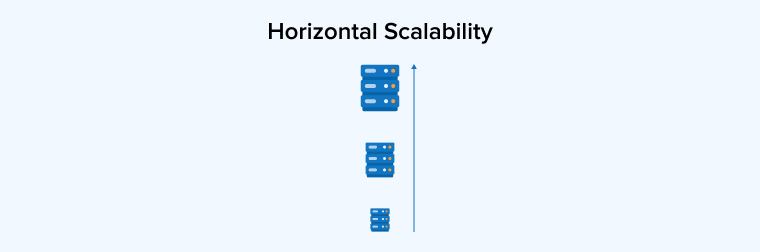

1. Horizontal Scalability

Horizontal scalability, also known as “scaling out,” refers to the ability of a software system to increase its capacity by adding more machines or nodes to the infrastructure, instead of upgrading a single server.

Advantages of Horizontal Scalability

- Fault Tolerance: Distributing workloads across multiple servers decreases the possibility of a single point of failure, enhancing system reliability.

- High Scalability Potential: Horizontal scaling lets you add more servers as needed, offering virtually unlimited capacity to handle higher traffic volumes or larger datasets.

- Flexible Resource Allocation: Resources can be dynamically allocated or removed based on real-time demands, optimizing operational efficiency and cost management.

- Enhanced Geographic Distribution: Scaling can be implemented across multiple regions or data centers, improving performance for global users and offering redundancy in case of regional failures.

- Cost-Effective Growth: Instead of investing in costly upgrades to high-performance hardware, as in vertical scaling, horizontal scaling leverages more affordable commodity servers or cloud instances, making it more budget-friendly and flexible.

Disadvantages of Horizontal Scalability

- Data Consistency Issues: Maintaining data consistency across distributed nodes can be difficult, especially in systems that require real-time synchronization or strong consistency guarantees.

- Higher Initial Setup and Maintenance Costs: Setting up a horizontally scalable system may involve significant time and cost to properly configure the infrastructure, networking, and automation tools.

- Debugging Complexity: Bugs are harder to locate and reproduce in a distributed setup. Tracing how nodes interact, and which errors arise from those interactions, is time-consuming.

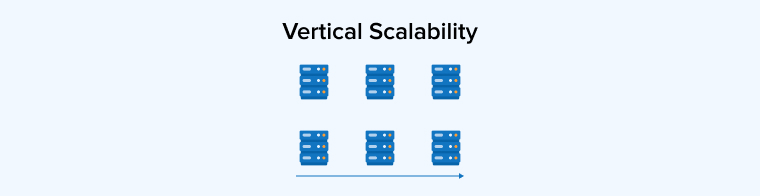

2. Vertical Scalability

Vertical scaling, or “scaling up,” means increasing the capacity of an existing node, computer, or server by enhancing its hardware resources, such as CPU and RAM. Rather than distributing the workload across multiple machines, vertical scaling boosts the performance of the existing infrastructure to handle more users, requests, or data.

Advantages of Vertical Scalability

- Easy Maintenance: Vertical scalability involves upgrading a single server instead of managing multiple systems. Therefore, the administrator can handle maintenance and upgrades with less effort and in less time.

- Improved Performance: Upgrading the existing hardware, such as the CPU, memory, or storage, increases the server’s capacity to handle larger workloads efficiently.

- Data Consistency: Since a single server handles all data processing, there are no replicas to keep in sync, which largely avoids data inconsistencies and simplifies data management.

- Simplicity: Increasing a single server’s load-handling capacity requires only minimal changes to the software infrastructure, which helps minimize operational disruptions.

- Cost-effective: Applications demanding high computing power for short periods can benefit from vertical scaling. Because only one machine is upgraded, fewer resources need to be purchased, which can keep costs down.

Disadvantages of Vertical Scalability

- Single Point of Failure: Here, a single server manages the complete workload, so any failure has a much larger impact. If the server goes down, all the services relying on it are affected, which may result in data loss and significant downtime.

- Hardware Limitations: Servers have fixed physical limits on CPU cores, memory, and storage devices. Upgrading beyond those limits is expensive and complicated, and the upgrade itself may cause operational disruptions or downtime.

- Vendor Lock-in: Changing the hardware or software provider can result in high switching expenses. Integrating new systems might require significant effort and resources, which limits flexibility.

2. Role of Software Scalability in System Design and Business Growth

The development of scalable software plays a pivotal role in both system design and long-term business growth. Let’s understand how:

- Boosts System Performance: Scalable systems enhance performance by implementing load balancing to distribute workloads across multiple servers or resources. This reduces processing delays, improves response times, and results in a more responsive user experience.

- Maintains High Availability: Scalability helps systems remain functional during traffic spikes or hardware failures. This reliability is essential for critical applications that require continuous access to ensure uninterrupted service delivery.

- Enables Business Agility: With the ability to manage growing demand, businesses can tap into new markets, respond quickly to emerging trends, and capitalize on sales opportunities without being constrained by infrastructure limitations.

- Enhances User Experience: A scalable system consistently performs well under varying loads, reducing the chances of slowdowns or outages. This reliability leads to higher customer satisfaction and builds long-term trust and loyalty.

- Facilitates Tech Integration: Scalable architectures are designed to adapt and grow, making it easier to integrate new tools, frameworks, or platforms without needing to completely restructure the existing system.

3. When is Scaling Software Necessary?

Scaling software becomes a necessity:

- When Your Software Fails to Meet User Expectations: If users experience slow responses, crashes, or frequent downtime, it signals that the current system can’t handle the load effectively. Scaling is necessary to improve performance and deliver a smoother user experience.

- When Launching New Products or Services: Introducing new features or services often increases traffic and data processing demands. Scaling ensures your infrastructure can support this growth without causing disruptions or delays.

- When Error Rates Increase: Rising error rates often indicate that system components are overloaded or failing under increased pressure. Scaling helps distribute workloads more evenly, reducing errors and maintaining system stability.

- When an Existing System Faces Frequent Bottlenecks: Persistent slowdowns or resource saturation highlight capacity limitations within the system. Scaling addresses these bottlenecks by adding resources or spreading the load to enhance throughput.

- When You Want to Improve System Resilience: Scaling enables redundancy and failover capabilities, allowing the system to remain operational even if individual parts fail. This increases reliability and ensures continuous service availability under stress.

4. Strategies to Maintain High Software Scalability

Scalable software solutions are advantageous in numerous ways, but how will you implement better software scalability? Take a look below at some of the best practices for getting scalable software.

4.1 Database Sharding

Sharding splits a large dataset into smaller partitions, called shards, and stores each shard on a separate database instance. Because every instance handles only a portion of the data and queries, reads and writes are spread across multiple machines instead of overloading a single database.
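
As a rough illustration of how a sharded setup might route a record to one of several database instances, here is a minimal sketch of hash-based routing. The shard names and the `shard_for` helper are hypothetical, and a real system would map each name to an actual database connection.

```python
import hashlib

# Hypothetical shard names; in practice each would map to a database connection.
SHARDS = ["shard-0", "shard-1", "shard-2", "shard-3"]


def shard_for(key: str, shards: list[str] = SHARDS) -> str:
    """Pick a shard by hashing the key, so the same key always maps to the same shard."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap it to the shard count.
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


if __name__ == "__main__":
    for user_id in ("user:1", "user:2", "user:3"):
        print(user_id, "->", shard_for(user_id))
```

One trade-off of this simple modulo approach is that changing the number of shards remaps most keys. Techniques such as consistent hashing are commonly used to reduce that reshuffling.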

4.2 Incorporate Asynchronous Processing

Asynchronous processing allows tasks to run independently without blocking the main process, enhancing responsiveness and throughput. It’s especially useful for background jobs and long-running operations, and it makes efficient use of resources through non-blocking operations and concurrency models such as multi-threading or event-driven designs. Additionally, asynchronous request handling allows applications to process multiple requests concurrently, improving scalability and efficiency.
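
To make non-blocking request handling concrete, the sketch below uses Python’s standard `asyncio` library. The `handle_request` and `handle_all` functions are hypothetical, and `asyncio.sleep` stands in for real I/O such as a database query or an HTTP call.

```python
import asyncio
import time


async def handle_request(request_id: int, delay: float = 0.1) -> str:
    """Simulate an I/O-bound request with a non-blocking sleep."""
    await asyncio.sleep(delay)
    return f"request {request_id} done"


async def handle_all(count: int) -> list[str]:
    """Run all handlers concurrently; gather returns results in submission order."""
    return await asyncio.gather(*(handle_request(i) for i in range(count)))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(handle_all(10))
    elapsed = time.perf_counter() - start
    print(f"{len(results)} requests finished in {elapsed:.2f}s")
```

Because the waits overlap, the ten simulated requests should finish in roughly the time of one rather than the sum of all ten. This benefit applies to I/O-bound work; CPU-bound tasks usually need separate processes or worker machines.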

4.3 Code and Algorithm Optimization

Efficient code and optimized algorithms reduce resource consumption and speed up execution times. This helps the system handle more operations with the same hardware, thereby improving scalability.
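
A small, hypothetical example of the idea: both functions below return the IDs that appear in two lists, but the second replaces a repeated list scan with a set lookup. The function names are illustrative only.

```python
def common_ids_slow(a: list[int], b: list[int]) -> list[int]:
    """O(len(a) * len(b)): every `in` check scans list b from the start."""
    return [x for x in a if x in b]


def common_ids_fast(a: list[int], b: list[int]) -> list[int]:
    """O(len(a) + len(b)): build a set once, then each lookup is O(1) on average."""
    b_set = set(b)
    return [x for x in a if x in b_set]


if __name__ == "__main__":
    print(common_ids_fast([1, 2, 3, 4], [2, 4, 6]))
```

Both versions return the same result, but on large inputs the set-based version does far less work, so the same hardware can serve more requests.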

4.4 Utilize Load Balancing

Load balancers distribute incoming traffic evenly across multiple servers to prevent overload on any single server. This ensures better resource utilization and maintains smoother performance during high demand.
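
Round-robin is one of the simplest distribution strategies. The sketch below shows the core idea with a hypothetical `RoundRobinBalancer` class. Production load balancers add health checks, weighting, and connection tracking, none of which are modeled here.

```python
import itertools


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation so each gets an equal share of requests."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._cycle = itertools.cycle(servers)

    def next_server(self) -> str:
        """Return the server that should receive the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])  # hypothetical server names
    for request_id in range(5):
        print(f"request {request_id} -> {balancer.next_server()}")
```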

4.5 Efficient Caching Mechanisms

Caching stores frequently accessed data closer to the user or application, reducing the requirement for recurrent database queries. This lowers latency and eases pressure on backend systems. As a result, the system can handle more concurrent requests without degrading performance.
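
The following is a minimal sketch of an in-memory cache with expiring entries, assuming a single process and no eviction policy beyond a time-to-live. The `TTLCache` class and its `get_or_load` method are hypothetical names, and the `loader` callback stands in for an expensive database query.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Tiny in-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float) -> None:
        self._ttl = ttl_seconds
        self._store: dict[str, tuple[float, Any]] = {}  # key -> (expiry time, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        """Return the cached value if still fresh; otherwise call `loader` and cache the result."""
        now = time.monotonic()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the expensive load
        value = loader()  # cache miss or expired entry: go to the backend
        self._store[key] = (now + self._ttl, value)
        return value
```

A caller might wrap a query as `cache.get_or_load("user:42", lambda: fetch_user(42))`, where `fetch_user` is whatever function reads from the database. Repeated calls within the time-to-live are then served from memory.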

4.6 Implement a Microservices Architecture

Breaking a system into smaller, independent services allows each to scale individually based on demand. This modular approach improves flexibility and reduces the risk of widespread failures. When demand increases for a particular feature or service, you can allocate more resources specifically to that microservice rather than scaling the entire application. This targeted scaling leads to better resource utilization and cost efficiency.

4.7 Adopt Content Delivery Networks (CDNs)

CDNs cache static content across geographically distributed servers, delivering it to users worldwide at high speed. This decreases latency and provides relief to the origin server during high traffic periods.

4.8 Cloud Computing

Leveraging cloud platforms offers on-demand resource scaling, allowing you to quickly add or reduce capacity as needed. Additionally, cloud services provide built-in monitoring, automation, and disaster recovery tools.

5. Common Challenges in Software Scalability

While implementing scalable solutions, you may encounter the following challenges:

5.1 Latency and Network Issues

As services are distributed across regions or data centers, the time it takes for data to travel between components increases, leading to higher latency. This can affect real-time performance, slow down user interactions, and reduce overall system responsiveness. Network instability, packet loss, or bandwidth limitations can further degrade performance under load.

5.2 Maintaining Performance and Cost Balance

Scaling up infrastructure to improve performance, such as adding servers, using premium cloud services, or adopting advanced caching, often leads to higher operational costs. Conversely, scaling too conservatively to save money can result in slow response times, downtime, and poor user experience. The goal is to find an optimal balance where resources are dynamically allocated based on real-time demand, ensuring efficiency without overspending.
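
One way to reason about allocating resources based on demand is a proportional rule: grow or shrink the instance count by the ratio of observed to target utilization, within fixed bounds. The sketch below is a generic illustration, not the algorithm of any particular cloud provider, and the function name, parameters, and default limits are all assumptions.

```python
import math


def desired_instances(
    current: int,
    observed_util_pct: float,
    target_util_pct: float,
    min_instances: int = 1,
    max_instances: int = 10,
) -> int:
    """Scale the instance count in proportion to observed vs. target utilization."""
    if current < 1 or target_util_pct <= 0:
        raise ValueError("current must be >= 1 and target_util_pct must be > 0")
    raw = math.ceil(current * observed_util_pct / target_util_pct)
    # Clamp to the allowed range: the floor protects availability, the ceiling caps cost.
    return max(min_instances, min(max_instances, raw))


if __name__ == "__main__":
    print(desired_instances(current=4, observed_util_pct=90, target_util_pct=60))  # scale out
    print(desired_instances(current=4, observed_util_pct=30, target_util_pct=60))  # scale in
```

The upper bound is what keeps a traffic spike from turning into runaway spending, while the lower bound keeps enough capacity running to avoid slow responses when demand returns.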

5.3 Architectural Limitations

Monolithic architectures with tightly coupled components make it challenging to scale different parts of the system independently. Lack of modularity increases the complexity of adding resources or handling increased load. Rigid database schemas and centralized data handling can become bottlenecks as traffic grows. Additionally, systems not designed for distributed computing often face challenges with latency, fault tolerance, and load balancing.

6. Tools to Overcome Software Scalability Challenges

Let’s discuss some tools for ensuring a scalable development process:

6.1 Redis

Redis is an in-memory data structure store commonly used for caching and message brokering. Storing frequently accessed data in memory significantly reduces database load and latency, enhancing overall system responsiveness during high traffic.

6.2 AWS Auto Scaling

AWS Auto Scaling automatically adjusts cloud resources based on demand, ensuring applications have the appropriate amount of compute power. This tool optimizes costs while maintaining consistent performance and availability.

6.3 Terraform

Terraform allows you to define infrastructure as code, ensuring consistent and reproducible deployments across varied environments. This makes it easy to scale and modify infrastructure resources without manual intervention.

6.4 Elastic Load Balancing (ELB)

ELB distributes incoming network traffic across multiple servers or instances, preventing any single node from becoming a bottleneck. It enhances fault tolerance and helps maintain a smooth user experience even during traffic spikes.

6.5 Apache Kafka

Kafka is a distributed event streaming platform that enables asynchronous communication between services. It decouples components, allowing systems to handle high-throughput data streams reliably and maintain performance during peak loads.

7. Final Thoughts

We live in a rapidly evolving digital world where scalable apps are no longer a luxury but an immediate need. Scalable systems empower organizations to respond quickly to market changes, launch new features, and maintain high availability even during peak traffic. For software development companies and teams, prioritizing scalability early in the design process helps avoid costly rework and technical debt later. By understanding and implementing scalability principles, businesses can ensure their software evolves gracefully alongside their ambitions and user needs, creating lasting value in an increasingly connected world.

FAQs

What does Software Scalability mean?

Software scalability is the system’s ability to efficiently manage intensifying workloads or a growing number of users without a loss in performance. It ensures the future growth and adaptability of the software as demand rises.

What are the key measures of scalability testing?

Software scalability is measured by evaluating how well software maintains performance as workload and user demand increase. Key metrics include system latency, response time, error rates, throughput, and resource utilization at different levels of demand.
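
As a rough sketch of how these metrics could be computed from raw request samples collected during a load test, consider the following. The `RequestSample` type and `summarize` function are hypothetical, and the 95th percentile uses the simple nearest-rank method.

```python
from dataclasses import dataclass


@dataclass
class RequestSample:
    latency_ms: float
    ok: bool  # False if the request ended in an error


def summarize(samples: list[RequestSample], window_seconds: float) -> dict[str, float]:
    """Compute throughput, 95th-percentile latency, and error rate for one test window."""
    if not samples or window_seconds <= 0:
        raise ValueError("need at least one sample and a positive window")
    latencies = sorted(s.latency_ms for s in samples)
    # Nearest-rank p95: smallest value with at least 95% of samples at or below it.
    p95_index = (95 * len(latencies) + 99) // 100 - 1
    errors = sum(1 for s in samples if not s.ok)
    return {
        "throughput_rps": len(samples) / window_seconds,
        "p95_latency_ms": latencies[p95_index],
        "error_rate": errors / len(samples),
    }
```

Running the same summary at several load levels and comparing the results shows where latency or error rate starts to climb, which is typically where the system stops scaling.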
