Containers and serverless computing are among the most widely used application deployment strategies, and each has advantages and drawbacks. So why does the serverless vs. containers comparison attract so much attention? Is it because some cloud enthusiasts believe serverless computing can replace containers, or because others believe serverless is merely another technology that can be implemented on top of containers?

Understanding the benefits and drawbacks of maintaining your own containers vs serverless services will help you choose which option is best for your project.

In this post, we will evaluate these two approaches to determine which (if either) is superior. What are the shared and unique characteristics? Which is appropriate for which software use case? Let’s begin with some context.

(Note: If you specifically want to compare between AWS Lambda and Azure Functions, check this article.)

1. What are Containers?

A container is a lightweight, virtualized package that holds both a program and the system libraries and settings it needs to run properly. The program and all of its dependencies are bundled into a single unit that behaves the same way on any host that provides a compatible container runtime.

As long as the host supports the container runtime, containerized applications are portable and can be moved from one server to another. Containers are faster and more efficient than virtual machines because only the operating system is virtualized, not the hardware, which makes them lighter and quicker to start. Kubernetes, Amazon ECS, and Docker Swarm are among the most notable container orchestration platforms.

1.1 Containerization Use Cases

Containers are a good fit for deploying applications in the following scenarios:

- If you want to use your preferred operating system and have full control over the installed programming language, computing resources, and runtime version.

- If you need to run software with specific version requirements, containers are an excellent starting point.

- If you would otherwise pay for large, conventional servers to run Web APIs, machine learning workloads, or long-running operations, containers are worth trying, since they can be less expensive than serverless functions for this kind of workload.

- If you want to refactor a very large and complex monolithic application, a container-based architecture is preferable because it copes better with complicated applications.

- Some businesses use containers to migrate legacy applications to more modern environments. Container orchestration systems such as Kubernetes come with established best practices that simplify the management of large-scale container environments.

- Container orchestration tools such as Kubernetes can absorb unexpected traffic through auto-scaling, although spinning containers up or down is not instantaneous.

2. What is Serverless?

Serverless computing is a widely used execution model in which the provider manages computing resources behind the scenes, and developers are responsible only for writing code that handles particular events. This code is packaged as a serverless function and executed by the serverless runtime as often as needed to serve user requests.

In a conventional infrastructure as a service (IaaS) approach, the cloud provider supplies virtual machines and bills for the time they are in use, regardless of the workloads actually running on them. The customer is responsible for managing the machine, deploying workloads to it, and resizing it as needed.

In a serverless architecture, by contrast, the customer is responsible only for supplying the function code and has no visibility into the underlying compute resources. The serverless platform allocates server resources dynamically, and customers are billed based on how often and how long their functions run.
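
The event-driven model described above can be sketched in a few lines of Python. This is a minimal illustration, not a production function: the `handler(event, context)` shape follows the convention used by AWS Lambda's Python runtime, while the event layout (a dictionary with a `name` key) and the response body are assumptions made up for this example.

```python
import json


def handler(event, context):
    """Handle a single event; the platform calls this once per invocation."""
    # Hypothetical event shape for this sketch: {"name": "<some string>"}
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local stand-in for the serverless runtime: invoke the handler directly.
    # The context object is unused here, so None is passed in its place.
    print(handler({"name": "reader"}, None))
```

The developer writes only the handler. Deciding when to call it, how many copies to run in parallel, and where they run is left to the platform.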

2.1 Serverless Use Cases

Serverless computing is ideal in the following scenarios:

- If your traffic fluctuates unpredictably. Serverless not only scales to handle the load, it also scales down to zero when there is no traffic.

- If you are concerned about the cost of server administration and the amount of resources your software requires, serverless is a good solution for your business scenario.

- If you would rather not spend much time thinking about where and how your program runs.

- Serverless websites and apps can be developed and deployed without setting up the underlying infrastructure. With a serverless environment, it is feasible to build a fully functional application or website within days.

- Serverless architectures make it possible to build efficient multimedia processing services. You can use managed services to automatically resize images and transcode video for different target screens.

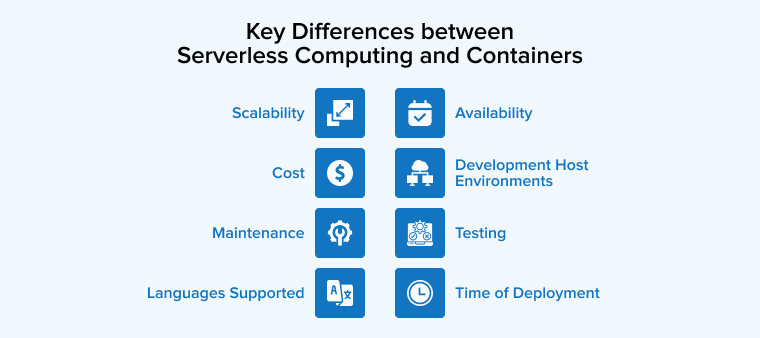

3. Key Differences Between Serverless Computing and Containers

Here is a detailed, factor-by-factor comparison of serverless computing and containers.

3.1 Scalability

For Serverless: In serverless deployments, the application's backend scales automatically to match demand. Serverless computing can be compared to a water supply: your service provider opens the valve, and your customers can draw as much as they want at any moment, paying only for what they consume. This is far more flexible than buying water one barrel at a time or shipping it one load at a time.

For Containers: In a container-based architecture, it is the developer's responsibility to decide in advance how many containers to deploy in order to scale the system to the expected demand. By analogy, a shipping company would try to send extra containers to a location to accommodate rising demand. This approach does not scale well when customer demand exceeds the shipping company's expectations.
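
The up-front sizing decision described above can be illustrated with a small calculation. This is only a sketch under stated assumptions: the function names, the requests-per-second capacity figure, and the headroom factor are all hypothetical and chosen for illustration.

```python
import math


def containers_needed(expected_rps: float, rps_per_container: float,
                      headroom: float = 0.2) -> int:
    """Return how many containers to provision for an expected load.

    headroom is the extra fraction of capacity added on top of the estimate.
    """
    if rps_per_container <= 0:
        raise ValueError("rps_per_container must be positive")
    target = expected_rps * (1 + headroom)
    # Always keep at least one container running.
    return max(1, math.ceil(target / rps_per_container))


def shortfall(actual_rps: float, containers: int,
              rps_per_container: float) -> float:
    """Return the load that cannot be served with the provisioned containers."""
    capacity = containers * rps_per_container
    return max(0.0, actual_rps - capacity)


if __name__ == "__main__":
    planned = containers_needed(1000, 100, headroom=0.25)
    print(planned)                        # 13 containers for 1000 rps plus 25% headroom
    print(shortfall(2000, planned, 100))  # 700.0 rps unserved if demand doubles
```

When real demand exceeds the estimate, the fixed fleet falls short until more containers are started, which is the scaling gap the shipping analogy points at.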

3.2 Cost

For Serverless: Deploying applications on serverless services such as AWS Lambda spares you needless resource costs, because your code is not executed until it is requested. You are charged only for the compute capacity your program actually consumes.

For Containers: Because containers are always running, cloud providers bill for the host capacity even when no one is using the application.
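
The difference between the two billing models can be made concrete with a rough calculation. The sketch below is illustrative only: every price in it is a made-up placeholder, not a real rate from any provider, and the function names are hypothetical.

```python
def serverless_monthly_cost(invocations: int, avg_duration_s: float,
                            price_per_request: float,
                            price_per_second: float) -> float:
    """Pay per use: cost grows with how often and how long functions run."""
    return invocations * (price_per_request + avg_duration_s * price_per_second)


def container_monthly_cost(instances: int, hours_per_month: float,
                           price_per_instance_hour: float) -> float:
    """Pay for capacity: cost accrues for every hour the hosts are running."""
    return instances * hours_per_month * price_per_instance_hour


if __name__ == "__main__":
    # All prices below are hypothetical placeholders, not real provider rates.
    for invocations in (10_000, 1_000_000, 100_000_000):
        fn_cost = serverless_monthly_cost(invocations, 0.5, 0.0000002, 0.00002)
        box_cost = container_monthly_cost(2, 720, 0.05)
        cheaper = "serverless" if fn_cost < box_cost else "containers"
        print(f"{invocations:>11} calls: {fn_cost:10.2f} vs {box_cost:8.2f} -> {cheaper}")
```

With placeholder numbers like these, the serverless bill stays near zero at low traffic, while the container bill is the same whether or not anyone uses the application. At sustained high volume the comparison can flip, which matches the earlier point that containers may be cheaper for long-running workloads.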

3.3 Maintenance

For Serverless: Maintaining serverless applications is comparatively simple. Because your serverless provider, such as AWS Lambda, handles administration, management, and software updates for the servers, the overall maintenance burden is greatly reduced.

For Containers: Unlike serverless, where developers need not think about server maintenance, with containers it is the developer's responsibility to maintain and update every container they deploy.

3.4 Languages Supported

For Serverless: Major FaaS services support a limited set of languages, such as Node.js, Python, Java, C#, and Go in the case of AWS Lambda.

For Containers: Containers support heterogeneous development environments, letting you work with whatever technology stack you choose. This may not seem like a great benefit, given that developers are proficient in many languages nowadays, but it is.

With a microservice design, you do not need to take programming language into account when recruiting for your development work. Microservices are independently deployable and scalable, and each service has clear module boundaries. As a result, services can be written in any programming language and maintained by different teams.

3.5 Availability

For Serverless: Serverless functions generally run for a short time (milliseconds to seconds) and terminate once they have processed the current event or data.

For Containers: Containers can run for extended periods.

3.6 Development Host Environments

For Serverless: Serverless functions are tied to their host platforms, which are usually cloud-based.

For Containers: Containerized apps run on modern Linux and Windows servers.

3.7 Testing

For Serverless: With serverless cloud services, it is often difficult for developers to replicate the backend environment locally, which makes testing challenging.

For Containers: Because containers run the same way wherever they are deployed, testing a container-based application before releasing it to production is fairly straightforward.

3.8 Time of Deployment

For Serverless: Because serverless functions are smaller than container microservices and do not bundle system dependencies, deploying them takes only seconds. Serverless applications also go live as soon as the code is uploaded.

For Containers: Containers take longer to set up during the early phases of development, but once they are configured, deployment takes only a few seconds.

4. Conclusion

The strengths of serverless computing and containers can offset one another's shortcomings, so pairing the two approaches is often advantageous.

Even if your application has a monolithic design and is too large to run on a serverless architecture, you can still benefit from serverless. Many applications include small back-end tasks that are typically implemented as cron jobs and shipped with the application. Serverless functions are well suited to these tasks.

Likewise, if you have a complex containerized system and some auxiliary jobs that are triggered by events, you do not have to run those jobs in containers. Delegate them to serverless functions to remove complexity from the containerized infrastructure and to benefit from the simplicity and low cost of serverless functions.

Serverless applications can also be combined with containers without much difficulty. Serverless functions generally persist data to cloud storage services, which can be mounted as Kubernetes persistent volumes. This allows stateful data to be integrated and shared across serverless and container systems.

In a nutshell, the wise choice is to let your project requirements drive the decision.
