Multithreading in C# is a powerful feature that allows multiple operations to run concurrently, improving application speed and responsiveness. It’s essential for building efficient software that can handle demanding workloads smoothly. This blog covers the key aspects of multithreading, including its advantages, the thread life cycle, implementation techniques, and best practices.

Mastering these concepts can significantly improve your development skills and application performance. If you’re working on high-performance solutions, especially as part of .NET development services, understanding how to implement and manage threads effectively is crucial for delivering scalable, responsive, and robust software applications.

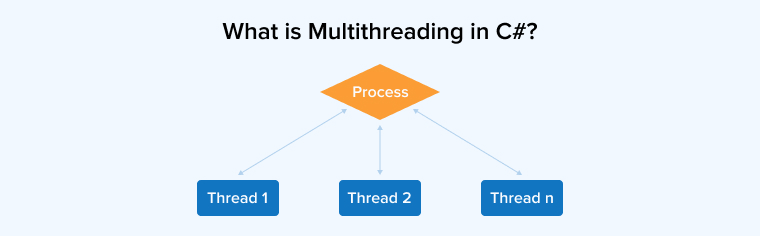

1. What is Multithreading in C#?

Multithreading in C# is the ability to execute multiple threads simultaneously within a single application. Each thread represents an independent path of execution and shares the resources, such as memory, with other threads in the same process. This allows tasks to run concurrently, improving performance and responsiveness. C# provides the System.Threading namespace to create and manage threads efficiently across various types of applications.

1.1 Advantages of Multithreading

Let’s move towards understanding the benefits that multithreading provides in C# applications:

1. Simultaneous Execution

Multithreading in C# allows multiple tasks to run concurrently, improving application performance and responsiveness. It makes efficient use of CPU resources by distributing workloads across multiple threads, reducing idle time.

2. Improved Scalability

Multithreading enables applications to handle multiple tasks or users simultaneously without significant performance degradation. It allows better use of system resources, especially on multi-core processors, making it easier to build responsive and high-performing applications that can efficiently scale with increased workload or user demands.

3. Enhanced Application Performance

Multiple operations can run concurrently, reducing wait times and improving efficiency. This approach enables faster task execution by effectively utilizing available CPU cores. As a result, it provides smoother user experiences, especially in applications that perform intensive computations or handle multiple input/output operations simultaneously.

4. Background Processing

Multithreading allows time-consuming tasks to run without interrupting the main thread. This keeps the application responsive, especially in user interfaces. Tasks such as file downloads, data processing, or logging can execute in the background, improving user experience and overall system efficiency without blocking primary operations.

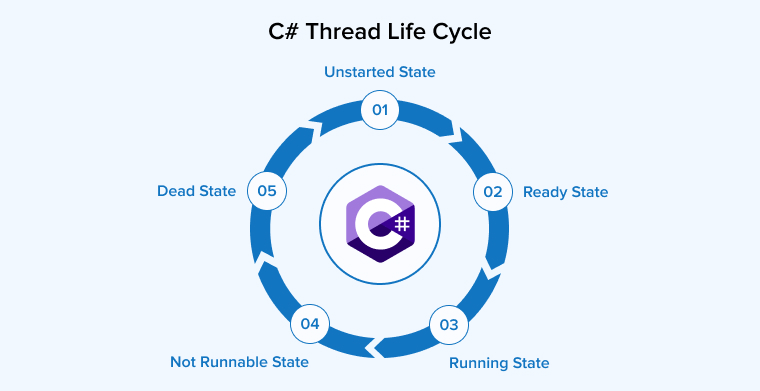

1.2 C# Thread Life Cycle

In C#, a thread goes through several stages during its lifetime, starting when an instance of the System.Threading.Thread class is created and ending when the thread completes its task or is terminated.

The following are the different states comprising the life cycle of a thread:

- Unstarted State: This occurs when a thread object has been created but has not yet begun running because the Start() method has not been called.

- Ready or Runnable State: The thread is prepared to run and is waiting for the CPU to assign processing time. It’s in the queue, ready to be scheduled.

- Running State: At this point, the thread has been selected by the thread scheduler and is actively executing its instructions on the CPU.

- Not Runnable State: The thread temporarily stops executing while it waits for input, a signal, or the completion of another task. A thread enters this state when:

  - Thread.Sleep(milliseconds) is called.

  - Thread.Join() is called, which makes the calling thread wait until the other thread completes its execution.

  - The thread is waiting for a lock or for an I/O operation to complete.

- Dead State: A thread enters the dead state when its task has finished or it has been stopped. This can occur naturally after the thread completes execution or due to an error. Older code sometimes ends a thread manually with the Abort() method, but this is not recommended because it can cause issues with resource management and application stability. On .NET Core and .NET 5 or later, Thread.Abort() is not supported and throws a PlatformNotSupportedException.
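The states above can be observed at runtime through the Thread.ThreadState property. The following is a minimal sketch, not a definitive implementation: the class and method names (ThreadLifeCycleDemo, Observe) are made up for illustration, and the worker is deliberately parked on a ManualResetEvent so that the blocked state can be sampled reliably.

```csharp
using System;
using System.Threading;

public static class ThreadLifeCycleDemo
{
    // Returns the states seen before Start, while the worker is blocked, and after Join.
    public static (ThreadState Before, ThreadState Blocked, ThreadState After) Observe()
    {
        using var gate = new ManualResetEvent(false);
        var worker = new Thread(() => gate.WaitOne()); // worker blocks until signaled

        ThreadState before = worker.ThreadState;        // thread created, Start() not called yet

        worker.Start();
        // Poll until the runtime reports the worker as blocked in a wait.
        while ((worker.ThreadState & ThreadState.WaitSleepJoin) == 0)
        {
            Thread.Sleep(1);
        }
        ThreadState blocked = worker.ThreadState;

        gate.Set();                                     // let the worker finish
        worker.Join();
        ThreadState after = worker.ThreadState;         // thread has terminated

        return (before, blocked, after);
    }

    public static void Main()
    {
        var (before, blocked, after) = Observe();
        Console.WriteLine($"{before} -> {blocked} -> {after}");
    }
}
```

ThreadState is a flags enumeration, so the sketch tests for the WaitSleepJoin flag rather than comparing for equality. The property is intended for diagnostics; it should not be used to coordinate threads.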

2. Ways to Implement Multithreading in C#

The following sections describe the ways to implement multithreading in C#.

2.1 Create Threads in C#

The three most common ways are:

- Using Thread Class.

- Using Task Class.

- Using ThreadStart Delegate.

1. Using Thread Class

The Thread class in the System.Threading namespace enables explicit creation and management of threads. It is the most basic mechanism for creating new threads, providing features such as setting thread priorities and joining threads.

Example:

    using System;
    using System.Threading;

    class ThreadExample
    {
        static void Main()
        {
            Thread thread = new Thread(() =>
            {
                Console.WriteLine("Thread is executing...");
                Thread.Sleep(500); // simulate work
                Console.WriteLine("Thread completed.");
            });

            thread.Start();
            thread.Join(); // Waits for thread to complete before exiting main thread
            Console.WriteLine("Main method ends.");
        }
    }

Output:

    Thread is executing...
    Thread completed.
    Main method ends.

2. Using Task Class (Task Parallel Library)

The Task class, which belongs to the System.Threading.Tasks namespace, is the preferred abstraction for writing asynchronous and parallel code. It encapsulates lower-level thread management and scales efficiently for both I/O-bound and CPU-bound tasks.

Example:

    using System;
    using System.Threading.Tasks;

    class TaskExample
    {
        static void Main()
        {
            Task task = Task.Run(() =>
            {
                Console.WriteLine("Task is executing...");
                Task.Delay(500).Wait(); // simulate asynchronous work
                Console.WriteLine("Task completed.");
            });

            task.Wait(); // Waits for the task to finish
            Console.WriteLine("Main method ends.");
        }
    }

Output:

    Task is executing...
    Task completed.
    Main method ends.

3. Using ThreadStart Delegate

The ThreadStart delegate is used to pass a method reference to a Thread object. The method must match the ThreadStart signature: no parameters and no return value.

Example:

    using System;
    using System.Threading;

    class ThreadStartExample
    {
        static void Main()
        {
            Console.WriteLine("Main thread begins.");

            // Define method to be executed
            void ThreadMethod()
            {
                Console.WriteLine("New thread started.");
                Thread.Sleep(300);
                Console.WriteLine("New thread finished.");
            }

            // Create a new thread with ThreadStart delegate
            Thread thread = new Thread(new ThreadStart(ThreadMethod));
            thread.Start();
            thread.Join(); // Optional: Wait for the thread to finish
            Console.WriteLine("Main thread ends.");
        }
    }

Output:

    Main thread begins.
    New thread started.
    New thread finished.
    Main thread ends.

4. Summary Table

| Method | Namespace | Description | Best Suited For |
|---|---|---|---|
| Thread | System.Threading | Low-level thread control | Fine-grained control, background tasks |
| Task | System.Threading.Tasks | High-level async/parallel operations | Modern, scalable async operations |
| ThreadStart | System.Threading | Explicit delegate-based threading | Delegate-based thread execution |

2.2 Synchronizing Threads

Before understanding thread synchronization, let us first understand the concept of the critical section. The part of the code that accesses resources shared by multiple threads, such as variables and files, is known as the critical section of the code. When two or more threads attempt to access and modify a shared resource at the same time, and the outcome depends on the order in which they happen to run, the situation is called a race condition. Such a condition can leave data in an inconsistent state, so synchronization is needed to prevent it.

Thread synchronization ensures that only one thread can enter the critical section of the code at any given time. This property is known as mutual exclusion. Threads then access the shared resource one after another rather than at the same time.

Now, there are four categories of thread synchronization methods in C#:

- Blocking Methods

- Locking Constructs

- Non-blocking Synchronization

- Signaling
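As an illustration of the non-blocking category, the Interlocked class performs simple updates atomically without taking a lock. The sketch below is only an example under stated assumptions: the class name InterlockedDemo and the thread and iteration counts are arbitrary choices made for illustration.

```csharp
using System;
using System.Threading;

public static class InterlockedDemo
{
    private static int _counter; // shared by all worker threads

    public static int Run(int threads = 4, int incrementsPerThread = 100_000)
    {
        _counter = 0;
        var workers = new Thread[threads];

        for (int i = 0; i < threads; i++)
        {
            workers[i] = new Thread(() =>
            {
                for (int j = 0; j < incrementsPerThread; j++)
                {
                    // Atomic read-modify-write: no update is lost, no lock is taken.
                    Interlocked.Increment(ref _counter);
                }
            });
            workers[i].Start();
        }

        foreach (var worker in workers) worker.Join();
        return _counter;
    }

    public static void Main()
    {
        Console.WriteLine($"Final counter value: {Run()}");
    }
}
```

Interlocked fits single-variable updates such as counters. When several fields must change together, a locking construct is usually the simpler choice.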

Let’s discuss some of the synchronization mechanisms from these categories with examples:

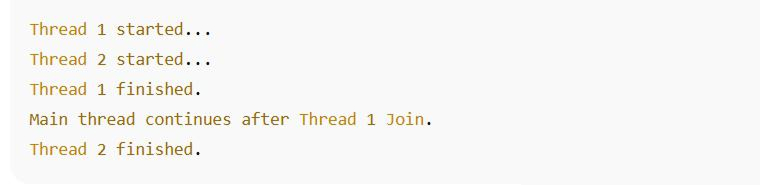

1. Join

Join is a method of the Thread class that blocks the calling thread (the thread that invokes Join) until the target thread (the thread on which Join was called) completes its operation and terminates.

Syntax of the Join method.

    public void Join();

C# Program demonstrating the use of the Join method.

    using System;
    using System.Threading;

    class Program
    {
        static void MyThreadMethod1()
        {
            Console.WriteLine("Thread 1 started...");
            Thread.Sleep(1000);
            Console.WriteLine("Thread 1 finished.");
        }

        static void MyThreadMethod2()
        {
            Console.WriteLine("Thread 2 started...");
            Thread.Sleep(2000);
            Console.WriteLine("Thread 2 finished.");
        }

        static void Main()
        {
            Thread t1 = new Thread(MyThreadMethod1);
            Thread t2 = new Thread(MyThreadMethod2);

            t1.Start();
            t2.Start();
            // Code that runs while both threads are working

            // Wait only for Thread 1 to finish
            t1.Join();

            // Code that runs once Thread 1 finished and Thread 2 running
            Console.WriteLine("Main thread continues after Thread 1 Join.");
        }
    }

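Output (the order of the first two lines may vary between runs):

    Thread 1 started...
    Thread 2 started...
    Thread 1 finished.
    Main thread continues after Thread 1 Join.
    Thread 2 finished.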

2. Lock Statement

The lock keyword is a fundamental synchronization primitive that grants exclusive access to a critical section of the code to one thread at a time. Other threads that need the same lock must wait until the thread holding it leaves the lock block and releases it.

Syntax of the Lock statement.

    lock (lockObject)
    {
        // Statement1
        // Statement2
        // And more statements to be synchronized
    }

C# Program demonstrating the use of the lock statement.

    using System;
    using System.Threading;

    class Program
    {
        static int counter = 0;                // Shared resource
        static object lockObj = new object();  // Lock object

        static void IncrementWithLock()
        {
            for (int i = 0; i < 1000; i++)
            {
                lock (lockObj)
                {
                    counter++;
                }
            }
        }

        static void Main()
        {
            Thread t1 = new Thread(IncrementWithLock);
            Thread t2 = new Thread(IncrementWithLock);

            t1.Start();
            t2.Start();
            t1.Join();
            t2.Join();

            Console.WriteLine($"Final counter value: {counter}");
        }
    }

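Output with Lock:

    Final counter value: 2000

Output without Lock:

If you remove the lock block, both threads may execute counter++ at the same time. Because counter++ is a read-modify-write sequence rather than an atomic operation, updates can be lost, so the result varies between runs and may be less than 2000.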

3. Monitor Class

The Monitor class is the primitive on which the lock statement is built, and using it directly provides additional control over access to shared resources. It offers static methods such as Enter(), TryEnter(), Exit(), Wait(), and Pulse() to manage concurrent access effectively and prevent race conditions.

C# Program demonstrating the use of the Monitor class.

    using System;
    using System.Threading;

    class Program
    {
        static int counter = 0;
        static object lockObj = new object();

        static void IncrementWithMonitor()
        {
            for (int i = 0; i < 1000; i++)
            {
                bool lockTaken = false;
                try
                {
                    // Blocks until the lock is acquired; lockTaken reports success
                    Monitor.Enter(lockObj, ref lockTaken);
                    counter++;
                }
                finally
                {
                    if (lockTaken)
                        Monitor.Exit(lockObj); // Always release lock if it was taken
                }
            }
        }

        static void Main()
        {
            Thread t1 = new Thread(IncrementWithMonitor);
            Thread t2 = new Thread(IncrementWithMonitor);

            t1.Start();
            t2.Start();
            t1.Join();
            t2.Join();

            Console.WriteLine($"Final counter value: {counter}");
        }
    }
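The Wait() and Pulse() methods mentioned above let one thread sleep until another thread changes shared state. The following is a rough sketch of that pattern as a small producer and consumer; the names MonitorSignalDemo, Gate, and Items are invented for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public static class MonitorSignalDemo
{
    private static readonly object Gate = new object();
    private static readonly Queue<int> Items = new Queue<int>();

    public static List<int> Run(int count = 5)
    {
        var received = new List<int>();

        var consumer = new Thread(() =>
        {
            for (int i = 0; i < count; i++)
            {
                lock (Gate)
                {
                    // Wait releases the lock while sleeping and reacquires it before returning.
                    // The loop rechecks the condition after every wake-up.
                    while (Items.Count == 0) Monitor.Wait(Gate);
                    received.Add(Items.Dequeue());
                }
            }
        });
        consumer.Start();

        for (int i = 1; i <= count; i++)
        {
            lock (Gate)
            {
                Items.Enqueue(i);
                Monitor.Pulse(Gate); // wake one waiting thread, if there is one
            }
        }

        consumer.Join();
        return received;
    }

    public static void Main()
    {
        Console.WriteLine(string.Join(", ", Run()));
    }
}
```

Both Wait and Pulse must be called while holding the lock on the same object. Checking the condition in a while loop keeps the consumer correct even if the producer enqueued items before the consumer started waiting.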

4. Mutexes

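A Mutex, unlike lock and Monitor, can also provide interprocess synchronization. It allows only one thread at a time to own it, and a named mutex extends that guarantee to threads in different processes. To create a system-wide mutex, call the Mutex constructor with a unique name; a mutex created without a name, as in the example below, is local to the current process.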

C# Program demonstrating the use of Mutex.

    using System.Threading;

    Mutex mutex = new Mutex();

    for (int i = 0; i < 5; i++)
    {
        Thread thread = new Thread(EnterCriticalSection);
        thread.Start(i);
    }

    void EnterCriticalSection(object threadId)
    {
        mutex.WaitOne(); // Acquire the mutex lock
        try
        {
            Thread.Sleep(1000); // Simulate work
        }
        finally
        {
            mutex.ReleaseMutex(); // Release the mutex lock
        }
    }
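To show the named form, here is a hedged sketch of a common use: detecting whether another instance of the same application already holds the mutex. The name "MyCompany.MyApp.SingleInstance" and the helper TryAcquire are hypothetical and only serve the example.

```csharp
using System;
using System.Threading;

public static class NamedMutexDemo
{
    // Hypothetical name; any string unique to the application would do.
    private const string MutexName = "MyCompany.MyApp.SingleInstance";

    // Returns true when this call created the mutex and therefore owns it.
    public static bool TryAcquire(out Mutex mutex)
    {
        // Arguments: initiallyOwned, name, createdNew.
        // If the mutex already exists, createdNew is false and the caller does not own it.
        mutex = new Mutex(true, MutexName, out bool createdNew);
        return createdNew;
    }

    public static void Main()
    {
        bool first = TryAcquire(out Mutex mutex);
        using (mutex)
        {
            Console.WriteLine(first
                ? "First instance: holding the mutex."
                : "Another instance is already running.");

            if (first) mutex.ReleaseMutex(); // only the owner may release
        }
    }
}
```

A real application would keep the mutex for its whole lifetime and release it on shutdown; the sketch releases it immediately so that it terminates on its own.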

5. Semaphore

The Semaphore class, derived from the WaitHandle abstract class, restricts the number of threads that can access a critical section concurrently. It maintains a count representing the number of threads that may still enter, decrements it each time a thread enters, and blocks additional threads when the count reaches zero.

C# Program demonstrating the use of Semaphore.

    using System.Threading;

    Semaphore semaphore = new Semaphore(2, 2); // Allow 2 threads at a time

    for (int i = 0; i < 5; i++)
    {
        Thread thread = new Thread(EnterCriticalSection);
        thread.Start(i);
    }

    // Not declared static: a static local function cannot capture the semaphore variable
    void EnterCriticalSection(object threadId)
    {
        semaphore.WaitOne(); // Acquire the semaphore
        try
        {
            // Critical section: Access shared resource
            Thread.Sleep(1000); // Simulate work
        }
        finally
        {
            semaphore.Release(); // Release the semaphore
        }
    }

6. ManualResetEvent/AutoResetEvent

In C#, ManualResetEvent and AutoResetEvent are synchronization primitives used for communication between threads. They help control when threads should start or stop their execution. ManualResetEvent remains signaled until it is manually reset, allowing multiple threads to proceed once it’s triggered. In contrast, AutoResetEvent automatically resets after releasing a single waiting thread, ensuring that only one thread continues at a time.

C# Program demonstrating the use of ManualResetEvent/AutoResetEvent.

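    using System.Threading;

    ManualResetEvent myEvent = new ManualResetEvent(false);

    // Thread 1: waits for the signal, then does its work
    Thread thread1 = new Thread(() =>
    {
        myEvent.WaitOne();
        // Do work
    });
    thread1.Start();

    // Thread 2 (here, the main thread): signals the event
    myEvent.Set();
    thread1.Join();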
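The difference between the two event types can be made visible by counting how many waiting threads a single Set() call lets through. This is an illustrative sketch only: ResetEventDemo and ReleasedByOneSet are invented names, and the 300 ms join timeout is an arbitrary value assumed to be long enough for released threads to finish.

```csharp
using System;
using System.Threading;

public static class ResetEventDemo
{
    // Starts `waiters` threads that block on `gate`, signals once,
    // and reports how many threads got through after that single Set().
    public static int ReleasedByOneSet(EventWaitHandle gate, int waiters = 3)
    {
        int passed = 0;
        var threads = new Thread[waiters];
        for (int i = 0; i < waiters; i++)
        {
            threads[i] = new Thread(() =>
            {
                gate.WaitOne();                    // block until signaled
                Interlocked.Increment(ref passed); // record that this thread was released
            });
            threads[i].Start();
        }

        gate.Set(); // one signal only

        // Released threads finish quickly; still-blocked threads make Join time out.
        foreach (var t in threads) t.Join(TimeSpan.FromMilliseconds(300));
        int result = Volatile.Read(ref passed);

        // Clean up: keep signaling until every remaining thread has been released.
        while (Volatile.Read(ref passed) < waiters)
        {
            gate.Set();
            Thread.Sleep(10);
        }
        foreach (var t in threads) t.Join();

        return result;
    }

    public static void Main()
    {
        using var manual = new ManualResetEvent(false);
        using var auto = new AutoResetEvent(false);
        Console.WriteLine($"ManualResetEvent: {ReleasedByOneSet(manual)} of 3 threads released");
        Console.WriteLine($"AutoResetEvent: {ReleasedByOneSet(auto)} of 3 threads released");
    }
}
```

With a ManualResetEvent the single signal should release all three threads, whereas an AutoResetEvent should release exactly one. Both classes derive from EventWaitHandle, which is why one helper can accept either.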

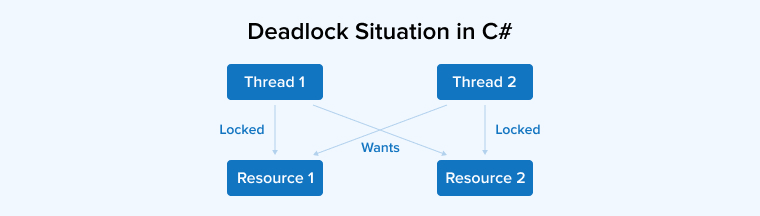

2.3 Deadlocks

Deadlock is a condition that halts the program’s execution when two or more threads are unable to complete their operations because each is waiting to acquire the resources held by the other. Four conditions must all hold for a deadlock to occur:

- Mutual Exclusion: A resource can only be used by one thread at a time.

- Hold and Wait: A thread holds one or more resources while waiting to acquire additional resources that are currently held by other threads. It does not release the resources it already holds while waiting.

- No Preemption: A resource cannot be taken away from the thread holding it; only that thread can release it.

- Circular Wait: This occurs when a group of threads is waiting in a cycle, where each thread is holding a resource needed by the next thread in the chain, creating a dependency loop.

Just refer to the image below to easily understand a deadlock condition:

Here, you can see that Thread 1 is holding Resource 1 and wants to access Resource 2. Meanwhile, Thread 2 is holding Resource 2 but requires Resource 1 to proceed with its operation. This situation results in a deadlock.

C# Program Demonstrating the Deadlock Scenario

    using System;
    using System.Threading;

    class Program
    {
        static object lockA = new object();
        static object lockB = new object();

        static void Thread1()
        {
            lock (lockA)
            {
                Console.WriteLine("Thread 1: Locked A");
                Thread.Sleep(100); // Give Thread 2 time to lock B
                lock (lockB)
                {
                    Console.WriteLine("Thread 1: Locked B");
                }
            }
        }

        static void Thread2()
        {
            lock (lockB)
            {
                Console.WriteLine("Thread 2: Locked B");
                Thread.Sleep(100); // Give Thread 1 time to lock A
                lock (lockA)
                {
                    Console.WriteLine("Thread 2: Locked A");
                }
            }
        }

        static void Main()
        {
            Thread t1 = new Thread(Thread1);
            Thread t2 = new Thread(Thread2);

            t1.Start();
            t2.Start();
            t1.Join();
            t2.Join();

            Console.WriteLine("Main thread ends.");
        }
    }
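One common way to avoid this scenario is to remove the circular wait by making every thread acquire the locks in the same order. The sketch below applies that idea to the two locks from the example; it is one possible fix rather than the only one, and the names LockOrderingDemo, Work, and Run are assumptions made for illustration.

```csharp
using System;
using System.Threading;

public static class LockOrderingDemo
{
    private static readonly object LockA = new object();
    private static readonly object LockB = new object();

    private static void Work(string name)
    {
        // Every thread takes LockA first and LockB second, so no wait cycle can form.
        lock (LockA)
        {
            Thread.Sleep(100); // simulate work while holding A
            lock (LockB)
            {
                Console.WriteLine($"{name}: holds A and B");
            }
        }
    }

    // Returns true when both threads finished within the timeout.
    public static bool Run()
    {
        var t1 = new Thread(() => Work("Thread 1"));
        var t2 = new Thread(() => Work("Thread 2"));

        t1.Start();
        t2.Start();

        // Join with a timeout so a regression shows up as false instead of a hang.
        bool firstDone = t1.Join(TimeSpan.FromSeconds(5));
        bool secondDone = t2.Join(TimeSpan.FromSeconds(5));
        return firstDone && secondDone;
    }

    public static void Main()
    {
        Console.WriteLine(Run() ? "Both threads completed." : "Timed out.");
    }
}
```

When a fixed order is not practical, Monitor.TryEnter with a timeout is another option, since it lets a thread give up and release what it holds instead of waiting forever.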

2.4 ThreadPool Class

In C#, the ThreadPool class provides a managed pool of threads for running tasks asynchronously. As a result, there’s no need to create new threads for every task. It reuses existing ones, decreasing the cost of frequent thread creation and disposal. This approach improves performance and efficiency, especially in applications with numerous short-lived tasks. By automatically handling thread management, ThreadPool simplifies multithreading and ensures better resource utilization, making it a practical choice for background processing, parallel execution, and improving application responsiveness without manual thread management.

C# Program demonstrating the use of ThreadPool class.

    using System;
    using System.Threading;

    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Main thread: " + Thread.CurrentThread.ManagedThreadId);

            for (int i = 1; i <= 5; i++)
            {
                ThreadPool.QueueUserWorkItem(MyThreadMethod, i);
            }

            Console.WriteLine("Tasks submitted to ThreadPool.");
            Console.ReadLine(); // Prevent app from exiting immediately
        }

        static void MyThreadMethod(object state)
        {
            int taskId = (int)state;
            Console.WriteLine($"Task {taskId} started on thread {Thread.CurrentThread.ManagedThreadId}");

            // Simulate some work
            Thread.Sleep(1000);

            Console.WriteLine($"Task {taskId} ended on thread {Thread.CurrentThread.ManagedThreadId}");
        }
    }

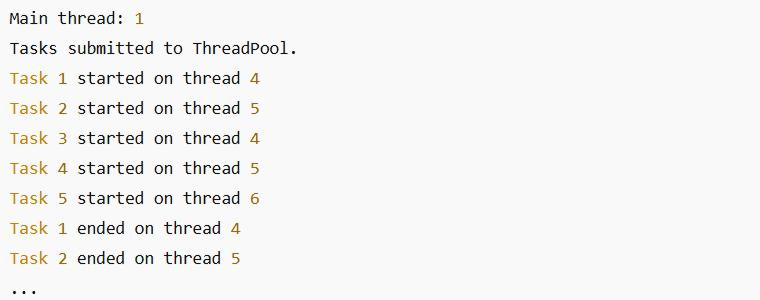

Output:

Note: The thread IDs may repeat because the ThreadPool reuses threads.
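The example above keeps the process alive with Console.ReadLine() because pool threads are background threads. As an alternative sketch, a CountdownEvent can make the main thread wait until every queued work item has finished; the class name ThreadPoolCompletionDemo and the 100 ms simulated workload are assumptions for this example.

```csharp
using System;
using System.Threading;

public static class ThreadPoolCompletionDemo
{
    // Queues `taskCount` work items and returns how many completed.
    public static int Run(int taskCount = 5)
    {
        int completed = 0;
        var done = new CountdownEvent(taskCount); // starts at taskCount, reaches zero when all signal

        for (int i = 1; i <= taskCount; i++)
        {
            ThreadPool.QueueUserWorkItem(state =>
            {
                Thread.Sleep(100);                    // simulate work
                Interlocked.Increment(ref completed); // thread-safe counter update
                done.Signal();                        // this work item is finished
            }, i);
        }

        done.Wait(); // block until every work item has signaled
        return completed;
    }

    public static void Main()
    {
        Console.WriteLine($"Completed work items: {Run()}");
    }
}
```

In newer code the same effect is often achieved with Task.Run and Task.WaitAll or Task.WhenAll, which also run on the thread pool.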

3. C# Multithreading Best Practices

Following best practices in C# multithreading ensures efficient, safe, and responsive applications. Proper thread management helps avoid issues such as deadlocks, race conditions, and resource contention during concurrent execution.

3.1 Utilize Immutable Data Structures

Using immutable data structures ensures thread safety by preventing data from being changed after creation. This avoids synchronization issues, as threads can safely read shared data without using locks. Immutable collections like ImmutableList and ImmutableDictionary help maintain consistency and reliability in concurrent applications by removing the risk of unintended modifications.
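A brief sketch of this idea follows, assuming the System.Collections.Immutable types are available (they ship with modern .NET and as a NuGet package for .NET Framework). The class name ImmutableDemo and the numbers used are illustrative only.

```csharp
using System;
using System.Collections.Immutable;
using System.Threading.Tasks;

public static class ImmutableDemo
{
    private static ImmutableList<int> _items = ImmutableList<int>.Empty;

    public static (int SnapshotCount, int FinalCount) Run()
    {
        _items = ImmutableList.Create(1, 2, 3);

        // A reader can keep this reference without locks: the list it points to never changes.
        ImmutableList<int> snapshot = _items;

        // Add returns a new list. Update swaps the shared reference atomically
        // and retries if another thread replaced it first.
        Parallel.For(0, 100, i =>
            ImmutableInterlocked.Update(ref _items, list => list.Add(i)));

        return (snapshot.Count, _items.Count);
    }

    public static void Main()
    {
        var (snapshotCount, finalCount) = Run();
        Console.WriteLine($"Snapshot: {snapshotCount} items, current list: {finalCount} items");
    }
}
```

The snapshot still holds the three original items after the parallel loop, while the shared reference points to a list containing all 103.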

3.2 Make Use of Thread-safe Collections

The concurrent collections in the System.Collections.Concurrent namespace provide safe data access across multiple threads without requiring manual locking. These collections, such as ConcurrentDictionary, ConcurrentQueue, and ConcurrentBag, help prevent race conditions and data corruption.
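As a small illustration, the sketch below counts words from several threads with a ConcurrentDictionary. The class name ConcurrentCollectionsDemo and the sample words are made up for the example.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class ConcurrentCollectionsDemo
{
    public static ConcurrentDictionary<string, int> CountWords(string[] words)
    {
        var counts = new ConcurrentDictionary<string, int>();

        Parallel.ForEach(words, word =>
        {
            // Inserts 1 for a new key or adds 1 to the existing count, without an explicit lock.
            counts.AddOrUpdate(word, 1, (_, current) => current + 1);
        });

        return counts;
    }

    public static void Main()
    {
        var counts = CountWords(new[] { "a", "b", "a", "c", "a", "b" });
        Console.WriteLine($"a={counts["a"]}, b={counts["b"]}, c={counts["c"]}");
    }
}
```

The update delegate passed to AddOrUpdate may run more than once under contention, so it should be free of side effects.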

3.3 Avoid Sharing Mutable States

Sharing mutable data across threads can lead to unpredictable behavior and race conditions. To ensure thread safety and avoid hard-to-detect bugs, it’s best to eliminate or strictly control mutable shared states using synchronization techniques.

3.4 Avoid Global State

Avoiding global variables reduces the risk of race conditions and synchronization issues. Instead, keep shared data within objects and control access using locks or other thread-safe mechanisms.

3.5 Use Asynchronous Model

Asynchronous methods, written with async and await on top of the Task class, let an operation wait for I/O without blocking a thread. The thread returns to the pool while the operation is pending, and the method resumes when the result is ready. This avoids unnecessary thread creation and keeps the application responsive under load.
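A minimal sketch of the asynchronous model is shown below. FetchLengthAsync is a hypothetical stand-in for a real I/O call such as an HTTP request, and Task.Delay simulates the waiting time.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class AsyncDemo
{
    // Hypothetical stand-in for an I/O-bound operation.
    private static async Task<int> FetchLengthAsync(string name)
    {
        await Task.Delay(200); // no thread is blocked during this wait
        return name.Length;
    }

    public static async Task<int> TotalLengthAsync(params string[] names)
    {
        // Start every operation, then await them together so the waits overlap.
        int[] lengths = await Task.WhenAll(names.Select(FetchLengthAsync));
        return lengths.Sum();
    }

    public static async Task Main()
    {
        int total = await TotalLengthAsync("alpha", "beta", "gamma");
        Console.WriteLine($"Total length: {total}");
    }
}
```

Because the three simulated calls overlap, the total wait is roughly one delay rather than three. Awaiting all the way up to Main avoids blocking calls such as Wait() or Result.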

3.6 Use Thread-local Storage

Thread-local storage allows each thread to have its own copy of a variable, preventing data conflicts and race conditions. This makes it easier to manage thread-specific data without requiring complex locking or synchronization mechanisms.
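The following sketch uses the ThreadLocal<T> class to give each thread its own counter. The class name ThreadLocalDemo and the thread and iteration counts are arbitrary choices for illustration.

```csharp
using System;
using System.Threading;

public static class ThreadLocalDemo
{
    // Each thread gets its own value, created by the factory on first access.
    private static readonly ThreadLocal<int> LocalCounter = new ThreadLocal<int>(() => 0);

    public static int[] Run(int threads = 3, int increments = 1000)
    {
        var results = new int[threads];
        var workers = new Thread[threads];

        for (int i = 0; i < threads; i++)
        {
            int index = i; // per-iteration copy for the lambda
            workers[i] = new Thread(() =>
            {
                for (int j = 0; j < increments; j++)
                {
                    LocalCounter.Value++; // touches only this thread's copy, so no lock is needed
                }
                results[index] = LocalCounter.Value;
            });
            workers[i].Start();
        }

        foreach (var worker in workers) worker.Join();
        return results;
    }

    public static void Main()
    {
        Console.WriteLine(string.Join(", ", Run()));
    }
}
```

Every thread ends with its own count equal to the number of increments it performed, because the threads never share the counter.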

3.7 Adjust and Manage Thread Pool Settings

Regularly monitor and fine-tune thread pool settings to match your application’s workload. Adjust the minimum and maximum number of threads, as well as the idle timeout, to optimize performance. Use methods like ThreadPool.GetMinThreads and ThreadPool.GetMaxThreads to review the current thread pool configuration, and use ThreadPool.SetMinThreads and ThreadPool.SetMaxThreads to adjust these settings for efficient resource management.
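As a rough sketch of how these methods fit together, the helper below reads the current limits and raises the minimum number of worker threads. The name RaiseMinWorkerThreads and the increment of 2 are assumptions for illustration, and suitable values depend on measurements of the actual workload.

```csharp
using System;
using System.Threading;

public static class ThreadPoolSettingsDemo
{
    // Raises the minimum worker thread count by `extra`, capped at the current maximum.
    public static bool RaiseMinWorkerThreads(int extra)
    {
        ThreadPool.GetMinThreads(out int minWorker, out int minIo);
        ThreadPool.GetMaxThreads(out int maxWorker, out int maxIo);
        Console.WriteLine($"Min: {minWorker} worker / {minIo} I/O, Max: {maxWorker} worker / {maxIo} I/O");

        // SetMinThreads returns false when the requested values are rejected,
        // for example when they exceed the configured maximum.
        int target = Math.Min(minWorker + extra, maxWorker);
        return ThreadPool.SetMinThreads(target, minIo);
    }

    public static void Main()
    {
        bool changed = RaiseMinWorkerThreads(2);
        Console.WriteLine(changed ? "Minimum worker threads updated." : "Request was rejected.");
    }
}
```

The defaults work well for most applications, so changes like this are best made only after profiling shows thread pool starvation or excessive thread creation.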

4. Final Thoughts

Multithreading in C# is an important technique for developing fast, efficient, and responsive applications. By allowing multiple tasks to run concurrently, it helps maximize CPU usage and improve overall performance. Understanding the thread life cycle, different implementation methods, and best practices ensures reliable and safe multithreaded programming. Whether you’re working on desktop, web, or server applications, mastering multithreading enables you to handle complex operations smoothly without affecting the user experience. With the tools provided by the System.Threading namespace, developers can create scalable and robust solutions in today’s performance-driven environment.

FAQs

How will you differentiate between Multitasking and Multithreading in C#?

Multitasking runs multiple programs or processes simultaneously, while multithreading runs multiple threads within a single program. In C#, multithreading allows the concurrent execution of multiple tasks within the same application process.

Is C# Multithreaded by default?

A C# program starts with a single main thread, so it isn’t multithreaded by default, but .NET provides built-in support for multithreading through the System.Threading and System.Threading.Tasks namespaces.

What are the types of Threads available in C#?

In C#, there are two main types of threads: foreground and background threads. Foreground threads keep the application process alive until they finish, while background threads are terminated automatically once all foreground threads have ended.
