Businesses seek to use containerization and virtualization platforms to attain greater scalability, cost efficiency, and standardized app deployment across multiple platforms. While both options help maintain the balance between meeting modern cross-platform requirements and resource optimization, each has its own pros and cons.

You should seek consultation from a top software development company to discuss your requirements and find the right fit for your project. Before that, it is necessary to have a better understanding of your options. Therefore, this blog on containerization vs virtualization aims to educate you about their benefits, limitations, key differences, and popular providers.

1. What is Containerization?

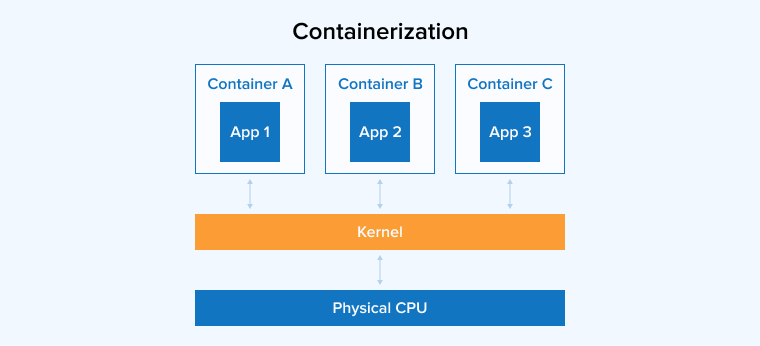

Containerization is a lightweight, OS-level virtualization that runs apps in isolated environments called containers. It doesn’t simulate an entire machine but only its OS. So, even if the code, libraries, and configurations of the apps reside in different containers, they still share the same operating system kernel. This makes your app highly portable across various environments.

Developers use container platforms like Kubernetes and Docker to break down applications into small, independently deployable components. Companies can accelerate app deployment and achieve efficient resource utilization through containerization.

1.1 What are Containers?

Containers are lightweight, portable, self-contained, and executable units used to package an app and its dependencies together. Each container stores a specific aspect of the app, which is then shipped across various environments to make the app portable.

Each container has its own isolated environment, but all containers share the same operating system kernel. So, even if various processes of the application run together in the environment, they remain separated through containers.

Thanks to containers, DevOps teams can develop and deploy code quickly, leading to faster resource provisioning. Think of containers as sealed boxes around processes rather than as lightweight VMs: they don’t simulate an entire operating system, they only isolate the processes running inside them.

1.2 How Does Containerization Work?

Each container is a deployable unit of software running on top of a host operating system. A host can support multiple containers simultaneously. For example, containers run as minimal, resource-isolated processes in a microservices environment, where they are isolated from one another and don’t have access to each other’s resources.

The image above shows the layout of a containerized app architecture. It resembles a multi-layered cake, with the container as the top layer. The bottom layer consists of physical infrastructure such as network interfaces, disk storage, and CPU. The middle layer includes the host operating system and its kernel. The kernel acts as a bridge between the operating system’s software and hardware resources.

Just above the host OS sits the container engine. At the top, there are libraries and binaries for each app, along with the applications that run in their isolated user spaces. The concept of containerization evolved from a Linux kernel feature called cgroups (control groups), which limits and accounts for the resources that groups of operating system processes can use.

Combined with namespace isolation, which gives processes their own view of components such as file systems and routing tables, cgroups became the foundation of Linux Containers, or LXC.
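
To make the cgroups idea more concrete: on a Linux host, the kernel lists each process’s cgroup membership in `/proc/<pid>/cgroup`, one `hierarchy-ID:controller-list:cgroup-path` record per line. The following is a minimal sketch of reading that file, assuming a Linux system. The helper names and the sample path are illustrative and do not come from any container runtime.

```python
from __future__ import annotations

from pathlib import Path
from typing import NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: list[str]
    path: str


def parse_cgroup(text: str) -> list[CgroupEntry]:
    """Parse the contents of /proc/<pid>/cgroup into structured entries."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Each record is "hierarchy-ID:controller-list:cgroup-path".
        # Split at most twice so a ':' inside the path is preserved.
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append(
            CgroupEntry(
                int(hierarchy_id),
                controllers.split(",") if controllers else [],
                path,
            )
        )
    return entries


def current_cgroups() -> list[CgroupEntry]:
    """Return this process's cgroups, or an empty list when not on Linux."""
    proc_file = Path("/proc/self/cgroup")
    if not proc_file.exists():
        return []
    return parse_cgroup(proc_file.read_text())


# Illustrative cgroup v2 record with a hypothetical path.
sample = "0::/system.slice/example.scope\n"
print(parse_cgroup(sample))
```

Run inside a container, `current_cgroups()` typically reports a path chosen by the container engine, which is one way to see that a container is an ordinary process placed in its own resource group.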

1.3 Benefits of Containerization

Using containerization to deploy software apps provides an array of benefits, including:

- Efficiency: Containers are lightweight because they only bundle what the app truly needs to run. Virtual machines require a guest OS to run the app, but containers don’t. With no guest OS to boot, containers offer faster startup times and increased efficiency.

- Portability: The lightweight nature of containers makes them portable and easy to deploy. This allows developers to use agile methodologies to quickly build software that runs on various platforms.

- Scalability: Scaling containerized applications is easier compared to virtual machines. Docker Swarm, Kubernetes, and other container orchestration tools perform smart scaling to ensure all required containers keep running in the cluster.

- Resource Optimization: Containers are lightweight and run on top of the OS kernel without additional overhead. Unlike virtual machines, they don’t consume large amounts of hardware resources.

- Fault Isolation: Every container runs independently in an isolated environment. Failure in a single container doesn’t affect the entire cluster; other containers continue running normally. This allows developers to quickly identify and fix issues.
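
The scalability and fault isolation points above can be pictured as a reconciliation loop: the orchestrator compares the number of containers that should be running with the number that actually are, then starts or stops containers to close the gap. Below is a toy sketch of that idea. The `Cluster` class and the `web-N` naming are hypothetical, and real orchestrators such as Kubernetes or Docker Swarm are far more involved.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Toy stand-in for an orchestrator's view of one service."""

    desired_replicas: int
    running: set[str] = field(default_factory=set)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def crash(self, name: str) -> None:
        # A failure removes only that container; the others keep running.
        self.running.discard(name)

    def reconcile(self) -> list[str]:
        """Start or stop containers until the running count matches the desired count."""
        actions = []
        while len(self.running) < self.desired_replicas:
            name = f"web-{next(self._ids)}"
            self.running.add(name)
            actions.append(f"start {name}")
        while len(self.running) > self.desired_replicas:
            name = sorted(self.running)[-1]
            self.running.remove(name)
            actions.append(f"stop {name}")
        return actions


cluster = Cluster(desired_replicas=3)
print(cluster.reconcile())  # ['start web-1', 'start web-2', 'start web-3']
cluster.crash("web-2")
print(cluster.reconcile())  # ['start web-4']
```

Scaling is then just a change to `desired_replicas` followed by another `reconcile()` call, which is why adding or removing container replicas tends to be cheap.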

1.4 Limitations of Containerization

Containerization maintains consistency during app deployment and offers numerous benefits. However, it comes with certain limitations:

- Security: Isolation in containers is weaker than in virtual machines because all containers share the same OS kernel and components with the host OS. Attackers can potentially breach the host system through any compromised container. Therefore, the more containers you have, the higher the security risks.

- Compatibility: Containers are usually designed to run on specific container runtimes. A container packaged for one runtime may face compatibility issues if run on a different container runtime.

- Deployment Complexity: Deploying and managing numerous containers can be complicated, especially when working in a fast-changing and heterogeneous infrastructure.

- Networking: Because all containers run on a single host, they require a network bridge or a macvlan driver to map container network interfaces to host interfaces, which can make networking more complex.

1.5 Popular Container Providers

Let’s go through some of the most widely used container providers.

- Docker: Docker is one of the most widely used container runtimes. Docker Hub, its large public repository of popular containerized apps, allows you to quickly download and deploy containers to a local Docker runtime.

- rkt: Pronounced as “Rocket”, rkt is a security-focused container runtime that doesn’t allow any insecure functionality unless the user explicitly grants permission. rkt effectively addresses the problem of cross-contamination among containers, which can be a serious issue in other container runtimes.

- Linux Containers (LXC): Developers use this open-source Linux container runtime system to isolate OS-level processes from each other. Docker originally used LXC as its default backend runtime. The main purpose of LXC is to provide a vendor-neutral, open-source container runtime.

- CRI-O: It implements Kubernetes’ Container Runtime Interface (CRI) to enable the utilization of OCI-compatible runtimes. CRI-O is a lightweight alternative to Docker as a runtime in Kubernetes environments.

2. What is Virtualization?

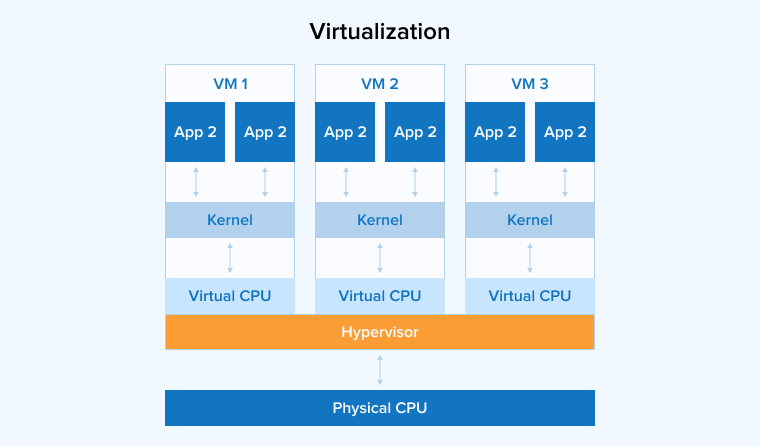

Virtualization is a technology that simulates physical hardware, such as disks and CPU cores, and presents it as separate virtual machines. Each virtual machine has its own drivers, processes, kernel, and guest operating system, and multiple virtual machines run in parallel on the same server. KVM, VirtualBox, and VMware are some of the most popular virtualization platforms.

2.1 What is a Virtual Machine?

A virtual machine is a software simulation of a physical machine. It runs a guest operating system on top of a hypervisor, which in turn runs either directly on the hardware or on a host OS. VMs have the same kinds of apps, network interfaces, OS, and components as a physical machine.

Typically, a physical computer can run multiple virtual machines, each with its own isolated components. Virtualization allows multiple applications to run on a single physical server, with each application operating in its own VM. To run VMs, the host needs a hypervisor, which provides a virtualization layer of simulated hardware. The hypervisor keeps each guest OS separate and allocates storage, memory, and processors to it, so the guest never controls the physical hardware directly the way a standard OS does.

2.2 How Does Virtualization Work?

Hypervisor software makes virtualization possible. It can be installed directly on the hardware or on top of a host operating system. Hypervisors divide the physical resources to use them in virtual environments.

When a virtual machine issues an instruction that requires physical resources, the hypervisor forwards the request to the physical system and tracks any resulting changes. There are two main types of hypervisors: Type 1 (bare-metal), which runs directly on the host hardware, and Type 2 (hosted), which runs on a host operating system.

Allowing multiple operating systems to run on the same hardware is virtualization’s key feature. The guest operating system in each VM handles essential activities like loading the kernel and bootstrapping. However, strict security measures restrict guests’ access to the host to ensure they cannot gain full control.
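
The resource-dividing role of the hypervisor can be sketched as simple bookkeeping: a host has a fixed pool of cores and memory, and each VM is carved out of what remains. This is a toy model under stated assumptions. The `Hypervisor` class is hypothetical, and it refuses any request beyond physical capacity, whereas real hypervisors often allow some overcommitment.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Hypervisor:
    """Toy model: carve a host's CPU cores and memory into VMs."""

    total_cores: int
    total_mem_gb: int
    # Maps VM name to its (cores, mem_gb) allocation.
    vms: dict[str, tuple[int, int]] = field(default_factory=dict)

    @property
    def free_cores(self) -> int:
        return self.total_cores - sum(cores for cores, _ in self.vms.values())

    @property
    def free_mem_gb(self) -> int:
        return self.total_mem_gb - sum(mem for _, mem in self.vms.values())

    def create_vm(self, name: str, cores: int, mem_gb: int) -> None:
        if name in self.vms:
            raise ValueError(f"VM {name!r} already exists")
        # No overcommit in this sketch: refuse requests beyond capacity.
        if cores > self.free_cores or mem_gb > self.free_mem_gb:
            raise RuntimeError(f"not enough free resources for {name!r}")
        self.vms[name] = (cores, mem_gb)

    def destroy_vm(self, name: str) -> None:
        # Releasing a VM returns its share to the free pool.
        self.vms.pop(name)


host = Hypervisor(total_cores=16, total_mem_gb=64)
host.create_vm("db", cores=8, mem_gb=32)
host.create_vm("web", cores=4, mem_gb=16)
print(host.free_cores, host.free_mem_gb)  # 4 16
```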

2.3 Benefits of Virtualization

Using virtualization helps reduce hardware costs and improve deployment speed and infrastructure flexibility. There are many more benefits, like:

- Reduced Operational Costs: Server virtualization lets you run multiple virtual machines (VMs) with different apps and operating systems on the same physical system. You can allocate five VMs on a single host instead of using five physical servers, which reduces overhead costs and saves money.

- Improved Productivity and Efficiency: Fewer physical servers make maintenance and management easier. This lets administrators and developers focus more on monitoring the apps and services to improve productivity and efficiency.

- Scalability: You can quickly create new virtual machines to meet increasing demand. Cloning or provisioning a VM can take only seconds; the main limit is the capacity of your hardware and underlying infrastructure.

- QA Testing: VMs provide consistent, isolated environments for testing. The apps and services mostly run the same on VMs as they do on physical servers, so developers can test performance and functionality across different virtual environments on the same host before deploying to production.

- Minimal Carbon Footprint: Virtualization lowers the number of physical servers required, which reduces the power consumption and cooling needs and decreases an organization’s carbon footprint.

2.4 Disadvantages of Virtualization

Virtualization offers several beneficial capabilities on a single physical server, but there are certain drawbacks. Below are the most common disadvantages of using virtualization for your development project.

- Security Risks: Virtual instances are often hosted on public clouds, which can be vulnerable to data loss or breaches. Multi-tenant infrastructure increases the risk that kernel or data leaks could affect other users. Proper isolation, patching, configuration management, and strong access controls are essential to reduce these risks.

- Performance Lag: If a server has limited resources and virtual machines are overprovisioned, app performance will decline because all virtual machines share the host’s resources. Careful capacity planning and resource limits/quotas help mitigate this issue.

- Virtual Machine Sprawl: VM sprawl happens when the number of virtual machines grows beyond the IT team’s capacity to manage them efficiently. This problem grows gradually as unused or forgotten VMs accumulate; those VMs waste disk space and other resources.

- High Initial Setup Costs: Virtualization in enterprises can have high setup costs, including licensing. For example, some enterprise licenses (such as certain VMware products) can be expensive, which may be prohibitive for a small business. Ongoing operational costs apply on top of the upfront costs, so it can take years to see a return on the investment.
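
Two of the drawbacks above, overprovisioning and VM sprawl, lend themselves to simple checks against a VM inventory. The sketch below assumes a hypothetical inventory with a vCPU count and a last-used date per VM. The 90-day idle threshold and the sample data are arbitrary choices made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class VM:
    name: str
    vcpus: int
    last_used: date


def vcpu_overcommit(vms: list[VM], physical_cores: int) -> float:
    """Ratio of allocated vCPUs to physical cores (above 1.0 means overcommitted)."""
    return sum(vm.vcpus for vm in vms) / physical_cores


def sprawl_candidates(vms: list[VM], today: date, idle_days: int = 90) -> list[str]:
    """Names of VMs that have been unused for at least `idle_days` days."""
    cutoff = today - timedelta(days=idle_days)
    return [vm.name for vm in vms if vm.last_used <= cutoff]


# Hypothetical inventory for a 16-core host.
today = date(2024, 6, 1)
inventory = [
    VM("web", 4, date(2024, 5, 30)),
    VM("old-test", 8, date(2023, 11, 2)),
    VM("db", 8, date(2024, 6, 1)),
]
print(vcpu_overcommit(inventory, physical_cores=16))  # 1.25
print(sprawl_candidates(inventory, today))  # ['old-test']
```

In this example, removing the idle `old-test` VM would bring the ratio back under 1.0, so the two problems are often worth reviewing together.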

2.5 Popular Virtual Machine Providers

Let us take a look at the most widely used virtual machine providers.

- VirtualBox: Developed by Oracle, VirtualBox is an open-source x86 virtualization platform. It offers an ecosystem of complementary tools to help developers build and deploy VM instances.

- VMware: VMware was one of the pioneers of x86 hardware virtualization. It provides hypervisors for deploying and managing multiple virtual machines, along with enterprise-oriented products and a powerful graphical user interface.

- QEMU: QEMU is a robust emulator and virtualizer that can run multiple operating systems on a single machine. It is primarily a command-line tool and doesn’t include a native GUI for execution or configuration. QEMU is open-source and, when used with KVM on Linux, can provide very high performance.

3. Containerization vs Virtualization: Comparison at a Glance

We explained how containerization and virtualization work and reviewed their benefits and drawbacks. Now, it’s time to compare them against critical factors to understand the differences between them.

| Factors | Containerization | Virtualization |
|---|---|---|
| Overhead | Because containers share the host OS kernel, they don’t require a full guest OS. They are lightweight and consume fewer resources. | Virtual machines run a full guest OS, which increases overhead, especially when many VMs run on the same host. |
| Startup Time | Containers start quickly because they don’t need to boot a complete operating system. | Virtual machines take longer to boot because each VM starts its own OS. |
| Portability | Containers package the application and its dependencies into a single unit and run on any host that supports the container runtime, giving high portability. | Virtual machines are portable at the image level but are more dependent on hypervisor compatibility. |
| Isolation and Security | All containers are isolated from each other but share the same host OS kernel. So, if one container is breached, the compromise might spread to the host or to other containers. | Each virtual machine runs its own kernel and is strongly isolated from the host OS and from other VMs, so a breach in one VM is much less likely to affect the others. However, complete isolation cannot be guaranteed because hypervisor vulnerabilities, misconfiguration, or shared-resource attacks can allow cross-VM or host compromises. |
| Scalability and Management | Because they are lightweight, containers are easy to scale and manage, even for complex applications. | Virtual machines are scalable but more resource-intensive and have longer startup times, which makes them less suitable for microservices and distributed applications. |
| Deployment | Tools such as Docker deploy individual containers, and Kubernetes orchestrates multiple containers across systems. | Every virtual machine runs on a hypervisor, which hosts guest operating systems. Some hypervisors are bare-metal (Type 1), while others run on top of a host operating system (Type 2). |
| Virtual Storage | Uses the local hard disk for storage on each node and SMB for shared storage across multiple nodes. | Uses a virtual hard disk for individual VMs and SMB for shared storage across multiple servers. |
| Load Balancing | Container orchestration platforms such as Kubernetes provide built-in load balancing to distribute workloads across containers, optimizing resource utilization and ensuring application responsiveness. | Virtualization environments use load balancing across failover clusters to distribute workloads among virtual machines, helping to optimize resource usage and maintain high availability. |
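
As a rough illustration of the load balancing row, the sketch below hands each incoming request to the next backend in turn. Round-robin is only one simple strategy, chosen here for clarity. It is not a description of how Kubernetes or any failover cluster actually schedules traffic, and the backend names are hypothetical.

```python
from __future__ import annotations

from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next backend in turn."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        # cycle() repeats the backend list endlessly, in order.
        self._backends = cycle(backends)

    def pick(self) -> str:
        return next(self._backends)


lb = RoundRobinBalancer(["container-a", "container-b", "container-c"])
print([lb.pick() for _ in range(5)])
# ['container-a', 'container-b', 'container-c', 'container-a', 'container-b']
```

The same logic applies whether the backends are containers or virtual machines; what differs in practice is how quickly new backends can be added to the pool.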

4. Conclusion

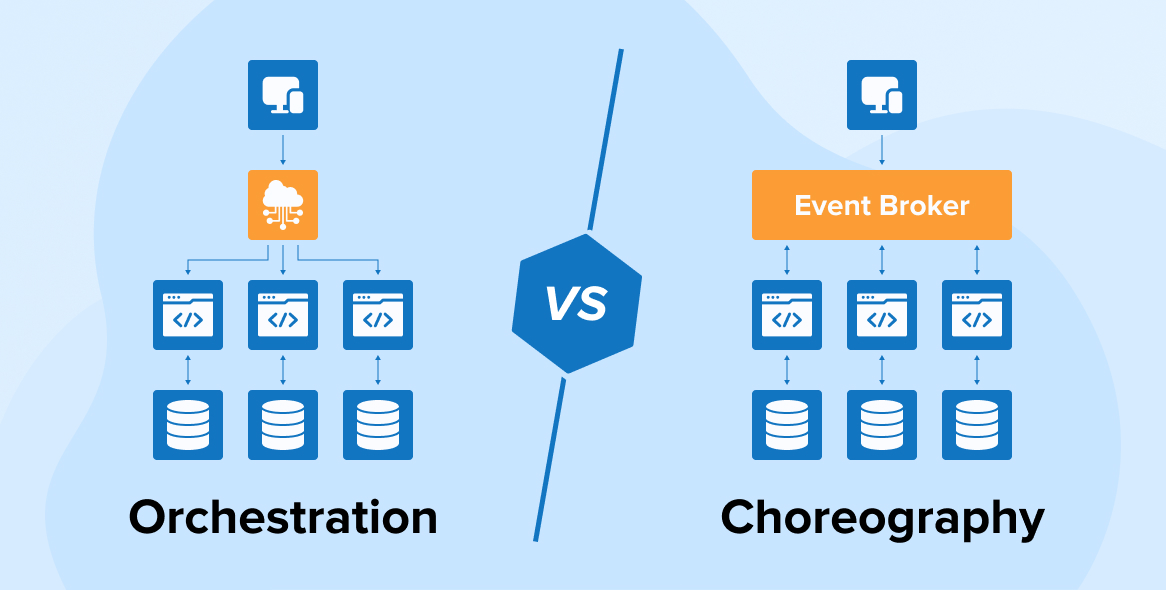

Containerization and virtualization are both robust techniques for infrastructure optimization and app deployment. Containerization offers better resource efficiency and portability, while virtualization provides stronger security and isolation. Containerization is also well-suited for building lightweight, scalable, cloud-native apps, often improves performance, and is commonly used to implement microservice architectures.

Meanwhile, virtualization is useful for building high-security apps in sectors such as healthcare and finance. It allows businesses to run apps in isolated environments to reduce security risks. When choosing between the two, consider project goals, available resources, and application requirements to select the most suitable option.
