Businesses are increasingly adopting AI/ML technologies to enhance operations and stay ahead of the competition. They need a robust programming language that enables data scientists to use large datasets to build and train desired machine learning models. For model development, Python has become the go-to option for many developers and businesses worldwide.

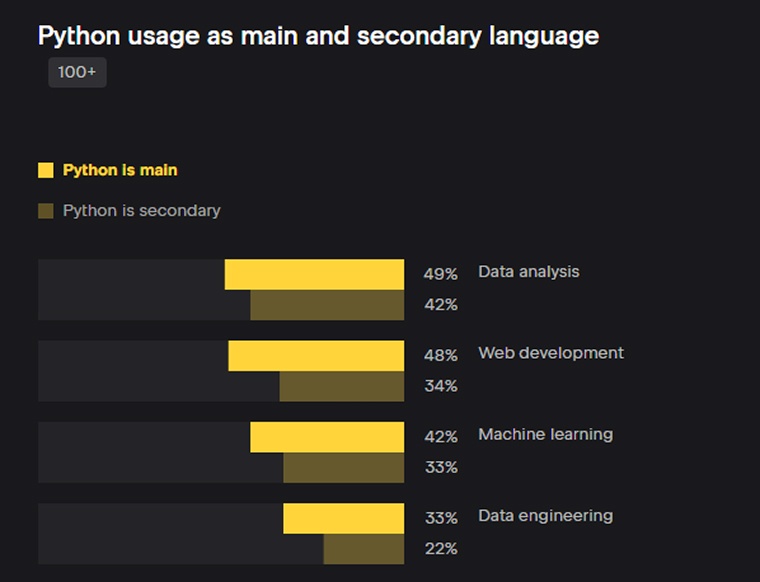

As this blog will show, there are many reasons to choose Python for ML, but the most important is its abundance of open-source libraries. They offer ready-made machine learning algorithms and tools for a wide range of data science operations on ML and deep learning (DL) models. According to the 2024 Python developer survey, 42% of Python users report using it as their main programming language for machine learning. This blog explores the best Python libraries for machine learning.

1. Why Use Python for Machine Learning?

Python is an ideal choice for machine learning for numerous reasons, a few of which are discussed below:

1.1 Ease of Use

Python has a simple, easy-to-read syntax, which makes it ideal for beginners. It allows you to write interactive and descriptive code for designing reliable systems. Developers and data scientists can leverage the language to build prototypes and validate ideas. A readable syntax helps users learn, interpret, and understand code quickly, leading to an accelerated development process.

1.2 Feature-rich Libraries

Python's ecosystem provides a wide array of libraries, such as NumPy and TensorFlow, that offer prebuilt features. These libraries streamline development because developers can reuse existing functionality instead of building everything from scratch, which shortens time to market. As a result, developers can focus on building the desired product rather than reinventing common components.

1.3 Vibrant Community

Python is an open-source programming language, so it is easily accessible. This accessibility is one of the main reasons Python has a large, active global community. Moreover, no specific skill set is required to begin using the language. It is easy to find Python resources online and implement them on personal and commercial projects. Additionally, if you face any difficulty with the project, online forums and community experts are eager to help guide you to success.

1.4 Scalability and Performance

When it comes to raw execution speed, Python is not the quickest language, because it is interpreted. However, its performance-critical libraries are optimized for speed: they expose a Python interface while delegating heavy computation to compiled backends written in languages like C and C++.

For example, when libraries like TensorFlow and NumPy execute operations, the code doing the actual work on the backend is already compiled. This is why Python is suitable for training large neural networks and performing calculations on big datasets.
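
To make the compiled-backend point concrete, here is a minimal sketch that computes the same dot product twice: once with a plain Python loop and once with NumPy's `np.dot`, which runs the loop inside compiled code. The array size and random seeds are arbitrary choices for illustration, and the timings will vary by machine, although the NumPy version is typically far faster.

```python
import time

import numpy as np


def python_dot(a, b):
    # Pure-Python loop: every multiply-add is executed by the interpreter.
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


n = 1_000_000
a = np.random.default_rng(0).random(n)
b = np.random.default_rng(1).random(n)

start = time.perf_counter()
slow = python_dot(a.tolist(), b.tolist())
loop_seconds = time.perf_counter() - start

start = time.perf_counter()
fast = float(np.dot(a, b))  # the same loop, run inside NumPy's compiled code
numpy_seconds = time.perf_counter() - start

print(f"Python loop: {loop_seconds:.3f} s")
print(f"NumPy dot:   {numpy_seconds:.4f} s")
```

Both versions return the same value up to floating-point rounding; only where the loop executes differs.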

In terms of scalability, Python also holds up well. Combined with cloud deployments and parallel computing tools, it can support robust enterprise-level machine learning workloads. Cloud-based architectures allow horizontal scaling, and Python can drive batch-inference pipelines optimized for high throughput.

1.5 Platform Independence

Python allows you to build solutions that run on all major operating systems, such as macOS, Windows, and Linux. This cross-platform capability is another reason Python is a suitable option for building machine learning applications. In most cases, you can write the code once and run it anywhere without platform-specific modifications, although libraries with compiled components must be installed in a build that matches each platform.

1.6 Interoperability

Python integrates smoothly with code written in other languages, such as C and C++, through its C extension API and standard-library tools like ctypes. This helps Python developers write optimized code that hands computationally intensive operations to compiled code and exchanges data with other systems.
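
As a small illustration of this interoperability, the sketch below uses the standard-library `ctypes` module to call the C math library's `sqrt` function directly from Python. It assumes a Unix-like system (Linux or macOS) where `ctypes.util.find_library("m")` can locate the C math library; on Windows, the library name and lookup differ.

```python
import ctypes
import ctypes.util

# Locate the C math library (assumes a Unix-like system such as Linux or macOS).
libm_path = ctypes.util.find_library("m")
libm = ctypes.CDLL(libm_path)

# Declare the C signature so ctypes converts arguments correctly: double sqrt(double)
libm.sqrt.argtypes = [ctypes.c_double]
libm.sqrt.restype = ctypes.c_double

# This call runs the compiled C function, not Python code.
print(libm.sqrt(2.0))
```

For larger integrations, developers more commonly write extension modules with tools such as Cython or pybind11, but the principle is the same: Python drives the logic while compiled code does the heavy lifting.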

2. The Best Python Libraries for Machine Learning

Using Python for machine learning and deep learning projects is an easy choice; the real challenge is picking a suitable Python library. The programming language offers a wide range of libraries for writing ML code, varying in scope and quality. Let us browse through the best options and discuss their features in brief to determine the right Python library for your machine learning project.

2.1 NumPy

In 2005, Travis Oliphant developed NumPy (Numerical Python), a library that gives Python developers n-dimensional array and matrix data structures along with fast numerical operations on them. NumPy acts as a foundation for many modern ML libraries, including TensorFlow. It is an open-source library for storing and manipulating n-dimensional arrays, and its computational capabilities help developers handle large datasets and derive insights for data science and machine learning.

Key Features

- Robust N-Dimensional Arrays: NumPy provides an N-dimensional array object, enabling Python programmers to work with efficient, fixed-type arrays. These arrays support many computational tasks and manage large, homogeneous datasets effectively.

- Numerical Computing Tools: NumPy provides many computing tools for mathematical operations on arrays, such as statistics, Fourier analysis, linear algebra, and array manipulation. These tools also simplify complex numerical operations.

- Interoperable: NumPy integrates seamlessly with other Python libraries for machine learning, data manipulation, data visualization, and more, allowing Python developers to perform comprehensive data analysis and scientific computation.
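
The following minimal sketch exercises the features listed above on small, made-up arrays: a fixed-type 2-D array, per-column statistics with broadcasting, a linear-algebra solve, and a Fourier transform of a sampled sine wave. The values are illustrative only.

```python
import numpy as np

# A 2-D array (matrix) with a single fixed element type, float64.
X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
print(X.shape, X.dtype)  # (3, 2) float64

# Statistics along an axis: the mean of each column.
col_means = X.mean(axis=0)

# Broadcasting: subtract the column means from every row without a Python loop.
X_centered = X - col_means

# Linear algebra: solve the system A @ w = b for w.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([9.0, 8.0])
w = np.linalg.solve(A, b)

# Fourier analysis: a sine wave with 5 cycles over 64 samples peaks at frequency bin 5.
t = np.arange(64) / 64
signal = np.sin(2 * np.pi * 5 * t)
spectrum = np.abs(np.fft.rfft(signal))
dominant_freq = int(np.argmax(spectrum))
print(w, dominant_freq)
```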

2.2 TensorFlow

Google released TensorFlow as an open-source library in 2015 for building neural network applications and large-scale systems. Its automatic differentiation computes the gradients needed to train deep learning models efficiently. Through the companion TensorFlow Probability library, it also provides probability distributions such as Gamma, Chi-square, and Bernoulli. TensorFlow is a strong choice for distributed computing and parallel processing because it can spread computation across multiple CPUs, GPUs, and machines. Using the library effectively requires a solid understanding of deep learning methodologies.

Key Features

- Parallel Neural Network Training: TensorFlow supports pipelining and multi-GPU training to improve efficiency for large-scale systems.

- Visualizer: TensorFlow provides a visualization tool called TensorBoard that can represent the same data in different ways, such as scalar plots, histograms, and graph views. It also supports debugging by letting you inspect the model graph and track metrics while a model trains.

- Event Logger: TensorFlow records event summaries that TensorBoard consumes to visualize computational graphs and to show scalar, histogram, image, and other outputs over time.
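
Here is a minimal sketch of two of the ideas above, assuming TensorFlow 2.x: `tf.GradientTape` for automatic differentiation and `tf.summary` for writing the event files that TensorBoard reads. The function, loss values, and temporary log directory are made up for illustration.

```python
import os
import tempfile

import tensorflow as tf

# Automatic differentiation: record operations on a tape, then request gradients.
x = tf.Variable(3.0)
with tf.GradientTape() as tape:
    y = x ** 2 + 2.0 * x
grad = tape.gradient(y, x)  # dy/dx = 2x + 2, which is 8.0 at x = 3
print(float(grad))

# Event logging: write one scalar summary per step for TensorBoard to visualize.
log_dir = tempfile.mkdtemp()
writer = tf.summary.create_file_writer(log_dir)
with writer.as_default():
    for step in range(3):
        tf.summary.scalar("loss", 1.0 / (step + 1), step=step)
writer.flush()
writer.close()

event_files = os.listdir(log_dir)  # TensorBoard reads these event files
```

Running `tensorboard --logdir` with the printed directory would then display the logged loss curve.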

2.3 Keras

Keras is a high-level API written in Python for building and training deep learning models with minimal code. It is easy to use and offers functions for defining and optimizing multi-input and multi-output models, as well as utilities for data loading and preprocessing. Keras helps Python developers with tasks like speech recognition, image classification, language generation, and more. It also lets you choose the backend that runs the computations: Keras 3 supports TensorFlow, JAX, and PyTorch.

Key Features

- User-Friendly Interface: Keras comes with a consistent and straightforward interface optimized for common use cases. Clear and actionable error messages make the development and debugging process easy.

- Modularity: The modular nature of Keras streamlines development. Developers create models by combining standalone modules such as neural layers, cost functions, optimizers, and initialization schemes. New modules are easy to add, which makes Keras suitable for advanced research and development.

- Multi-Backend Support: Keras has historically run on several backends, including Theano and TensorFlow; Keras 3 runs on TensorFlow, JAX, and PyTorch. This gives you the flexibility to choose a suitable framework.
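
The sketch below shows the modular style described above, assuming a recent TensorFlow installation that ships Keras. Layers, optimizer, loss (cost function), and metric are each separate pieces combined into one model. The toy dataset and layer sizes are arbitrary choices for illustration.

```python
import numpy as np
from tensorflow import keras

# Toy binary-classification data: the label is 1 when the four features sum above 2.
rng = np.random.default_rng(0)
x_train = rng.random((200, 4)).astype("float32")
y_train = (x_train.sum(axis=1) > 2.0).astype("float32")

# Stack modular building blocks: an input spec and two dense layers.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# The optimizer, loss, and metric are interchangeable modules chosen at compile time.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
history = model.fit(x_train, y_train, epochs=5, batch_size=32, verbose=0)

# Predicted probabilities for the first five samples.
probs = model.predict(x_train[:5], verbose=0)
print(probs.ravel())
```

With standalone Keras 3, the same model code can be written with `import keras`, and the backend is selected through the `KERAS_BACKEND` environment variable.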

2.4 PyTorch

PyTorch is an open-source machine learning library originally based on the Torch framework. It integrates easily with other Python libraries and is popular for its dynamic computation graphs, which can change during execution. The library is widely used for text processing with RNNs and for building computer vision and NLP applications.

Using GPU acceleration, PyTorch allows for faster computation and optimization of machine learning and deep learning models. It adopts a straightforward approach to building neural networks; hence, it is easy to learn.

Key Features

- Dynamic Computation Graph: PyTorch builds the computation graph on the fly while the Python program runs. This makes debugging easier and experimentation more efficient, because developers can change the network's behavior from one iteration to the next using ordinary Python control flow.

- Pythonic API: This API is user-friendly and easily accessible. Python developers use this concise and intuitive API to express complex models with very little code.

- GPU Acceleration: PyTorch integrates seamlessly with GPUs, whose parallel processing capabilities accelerate training on large datasets.
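
To illustrate the dynamic graph and GPU features, here is a minimal sketch with a hypothetical `TinyNet` module whose depth is chosen at call time with a plain Python loop. Autograd records whichever graph was built on that call. The layer sizes, random data, and depth schedule are illustrative assumptions, and the code falls back to the CPU when no GPU is available.

```python
import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical toy model: applies a shared layer a variable number of times."""

    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(4, 4)
        self.head = nn.Linear(4, 1)

    def forward(self, x, n_repeats):
        # Ordinary Python control flow: the graph is rebuilt on every call,
        # so its depth can differ from one training step to the next.
        for _ in range(n_repeats):
            x = torch.relu(self.layer(x))
        return self.head(x)


# Use a GPU if one is available, otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
model = TinyNet().to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.MSELoss()

x = torch.randn(8, 4, device=device)
target = torch.randn(8, 1, device=device)

for step, n_repeats in enumerate([1, 3, 2]):
    optimizer.zero_grad()
    loss = loss_fn(model(x, n_repeats), target)
    loss.backward()  # autograd differentiates the graph built in this step
    optimizer.step()
    print(f"step {step}: depth={n_repeats}, loss={loss.item():.4f}")
```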

2.5 XGBoost

eXtreme Gradient Boosting, commonly known as XGBoost, is a portable, flexible, and efficient library, with a Python package, optimized for distributed gradient boosting. It implements parallel tree boosting, which improves computational speed and efficiency; this speed is one reason XGBoost is widely used to solve complex industrial problems. Following the principle of gradient boosting, XGBoost combines many weak learners, typically decision trees, into a robust, high-performing model.

Key Features

- Automates Missing Data Handling: XGBoost handles missing values natively: during training, it learns a default branch direction for missing values at each split. Models can therefore make accurate predictions without an extensive imputation step, which is useful if your team is working with an incomplete dataset.

- Advanced Regularization Techniques: XGBoost supports built-in L1 and L2 regularization to control model complexity. These regularizers help prevent overfitting and enable the construction of robust, generalizable predictive models.

- Column Block for Parallel Learning: XGBoost stores data in in-memory units called blocks. Each block holds data columns sorted by feature value. The blocks are computed once before training begins and reused in later iterations, which avoids repeated sorting costs.

2.6 Scikit-learn

Scikit-learn is built on NumPy, SciPy, and Matplotlib, providing numerous tools for ML operations like dimensionality reduction, clustering, regression, and classification. Being an open-source Python library, scikit-learn is widely used for data mining and data analysis. With a user-friendly and intuitive interface, it is easily accessible to beginners while remaining a powerful tool for experts.

This Python library provides a multitude of algorithms for creating ML models. Additionally, scikit-learn includes utilities for model selection, evaluation, and data preprocessing that streamline the development and deployment of models. Its consistent estimator interface (fit, predict, transform) makes it straightforward to prepare data and swap one model for another.

Key Features

- Feature Extraction: scikit-learn can extract numerical features from raw data such as text and images.

- Cross-validation: This module estimates how well a model performs on unseen data by repeatedly training and evaluating it on different splits of the dataset.

- Diverse Algorithm Portfolio: scikit-learn comes with a rich and diverse portfolio of ML algorithms, ranging from simple decision trees to complex ensembles. For most classical (non-deep-learning) machine learning problems, this library offers a suitable algorithm.

2.7 Theano

Theano is one of the popular Python machine learning libraries, with ready-made tools for defining, executing, and optimizing mathematical expressions and matrix calculations on multi-dimensional arrays to create ML models. Theano also includes self-verification and unit-testing tools that detect and diagnose many types of errors. It is a versatile library that can be used for both small and large-scale projects.

Users can run data-intensive computations up to 140 times faster by using Theano with GPUs instead of CPUs. Theano also integrates tightly with NumPy: compiled Theano functions accept NumPy arrays directly, and Theano transforms symbolic expressions into efficient code.

Key Features

- Symbolic Expression Compilation: Theano lets you define mathematical expressions symbolically, without evaluating them immediately, and then compiles them into low-level code to speed up computation.

- Optimized Computation Graphs: The library creates a computation graph from symbolic expressions and implements different optimizations like operation fusion and algebraic simplifications. It helps eliminate unnecessary calculations and enhances the runtime performance.

- Automatic Differentiation: In machine learning, gradient-based optimization methods require computing derivatives of expressions with respect to variables; Theano performs this differentiation automatically.

2.8 OpenCV

Open Source Computer Vision, or OpenCV, is a comprehensive library with numerous built-in functions for computer vision tasks. Python developers can use it to build apps that accurately process a variety of image and video inputs, and to detect and analyze objects in images or videos with high precision. OpenCV also supports real-time image and video capture and saving, and the library is backed by an active online community.

Key Features

- Camera Calibration: OpenCV provides tools to calibrate a camera, allowing developers to correct lens distortion and obtain precise measurements from images. This feature is especially useful in AR and 3D modelling apps.

- Image and Video I/O: This Python library offers a comprehensive set of built-in functions to read, write, and manage videos and images. Load an image or video from a source, implement the necessary changes, then save the result in a suitable format.

2.9 H2O.ai

H2O.ai is an open-source platform for building AI, machine learning, and deep learning apps. It provides algorithms such as generalized linear models and gradient boosting. The library is not exclusive to Python; however, its Python module, h2o-py (installed as the h2o package), lets users interact with H2O clusters from Python to train, test, and deploy machine learning models. Both experienced data scientists and organizations without deep technical expertise can benefit from H2O.ai's robust capabilities.

Key Features

- Distributed Computing Support: H2O.ai supports distributed computing, so it can manage large datasets spread across the nodes of a cluster.

- Model Interpretability Tools: This Python library provides tools that help users understand how machine learning models make predictions, which is necessary for industries like healthcare and finance that prioritize transparency.

- AutoML Capabilities: H2O.ai includes built-in functionality to automate model selection, training, and tuning. As a result, it makes building high-quality models easier without requiring extensive domain expertise.

2.10 Pandas

Pandas provides data structures for efficiently organizing and manipulating large amounts of tabular data. It also offers numerous tools for reading and writing data from and to different databases and file formats. By helping developers clean and prepare their input data, pandas supports the data quality that AI/ML applications depend on. The library is popular for its data analysis capabilities and is widely used in fields like marketing, finance, and economics. Pandas can also align data and manage missing values. It integrates seamlessly with scikit-learn for model building and with Matplotlib for data visualization.

Key Features

- Data Cleaning: This feature declutters datasets and addresses irregularities and missing values to ensure your AI model receives high-quality data.

- Data Filtering and Selection: Pandas supports a wide array of filtering and selection functions that can express fine-grained conditions. Even when working with complex datasets, users can accurately extract the required information.

- Merging and Joining: Pandas makes it simple to combine different datasets, expanding analytical scope and providing a more holistic view.
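
A minimal sketch of the three features above on two small, made-up tables: reading CSV data (from an in-memory string rather than a file), filling a missing value, filtering with combined boolean conditions, and merging order totals onto customers. The column names and values are hypothetical.

```python
from io import StringIO

import pandas as pd

# Read tabular data; customer 2 has a missing age.
customers = pd.read_csv(StringIO(
    "customer_id,region,age\n"
    "1,north,34\n"
    "2,south,\n"
    "3,north,45\n"
))
orders = pd.DataFrame({"customer_id": [1, 1, 3], "amount": [120.0, 80.0, 200.0]})

# Data cleaning: fill the missing age with the median of the known ages.
customers["age"] = customers["age"].fillna(customers["age"].median())

# Filtering and selection: combine boolean conditions with &.
northern_over_40 = customers[(customers["region"] == "north") & (customers["age"] > 40)]

# Merging and joining: total the orders per customer, then left-join onto customers.
totals = orders.groupby("customer_id", as_index=False)["amount"].sum()
merged = customers.merge(totals, on="customer_id", how="left")
print(merged)
```

The left join keeps customer 2 even though they have no orders; their `amount` is left as NaN, which a later cleaning step could fill with 0.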

2.11 Seaborn

Built on Matplotlib, Seaborn is a Python data-visualization library that provides a high-level interface for creating visually appealing, statistically informative plots. It usually requires simpler commands and less code than Matplotlib, saving time and effort. Seaborn's ability to produce informative visualizations makes it a popular choice for many machine learning and deep learning projects.

Key features

- Advanced Plot Types: Seaborn offers specialized plots, including regression plots, pair plots, violin plots, and heatmaps, to help users understand correlations and distributions within datasets.

- Support for DataFrames: Seaborn integrates seamlessly with pandas DataFrames, reducing the need for manual data manipulation and enabling direct plotting from structured data.

- Built on Matplotlib: Although built on Matplotlib, Seaborn provides a simpler syntax, additional color palettes, and themes for creating aesthetically appealing, easy-to-read statistical graphics.
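
The sketch below plots directly from DataFrame columns with two of the plot types mentioned above: a regression plot and a correlation heatmap. The data is synthetic (y is roughly 2x plus noise, and z is unrelated), and the figure is rendered off-screen with Matplotlib's Agg backend and saved to an in-memory buffer.

```python
import io

import matplotlib

matplotlib.use("Agg")  # render off-screen; no display required
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Synthetic data: y depends linearly on x; z is independent noise.
rng = np.random.default_rng(0)
df = pd.DataFrame({"x": rng.normal(size=100)})
df["y"] = 2 * df["x"] + rng.normal(scale=0.5, size=100)
df["z"] = rng.normal(size=100)

sns.set_theme(style="whitegrid")  # a Seaborn theme applied to Matplotlib figures
fig, axes = plt.subplots(1, 2, figsize=(10, 4))

# Regression plot and correlation heatmap, referencing columns by name.
sns.regplot(data=df, x="x", y="y", ax=axes[0])
sns.heatmap(df.corr(), annot=True, cmap="vlag", ax=axes[1])

buffer = io.BytesIO()
fig.savefig(buffer, format="png")
plt.close(fig)
```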

2.12 LangChain

LangChain is a framework that helps Python developers integrate their apps with large language models (LLMs). It links a model with multiple data sources, such as other apps, local files, and the internet, making information easily accessible to the model and improving its flexibility and functionality. LangChain simplifies implementing LLM-powered features while remaining compatible with most Python libraries. Developers also use it to chain components such as prompts, models, databases, APIs, and memory together when creating interactive and dynamic AI solutions.

Key Features

- Memory for Context Retention: This feature helps chatbots remember past interactions.

- Retrieval-Augmented Generation (RAG): This feature improves model responses by retrieving relevant external data at query time and adding it to the prompt, so the model can answer from up-to-date or domain-specific sources.

- Workflow Orchestration: LangChain chains the AI-driven processes together, enabling complex automation and making LLMs more dynamic, useful, and context-aware in real-world apps.

2.13 LlamaIndex

LlamaIndex is a Python data framework for LLM applications that ingests, indexes, and retrieves both structured and unstructured data so that models can generate responses grounded in it. It streamlines indexing and querying over large document collections, allowing models to fetch accurate information quickly. LlamaIndex also integrates with many data sources and LLM providers.

Key Features

- Data Indexes: This feature connects the raw data with LLMs so that models can provide relevant responses. Data indexing structures ingested data as graphs, lists, and trees, making it easy for LLMs to search and retrieve information.

- Query Engines: Query engines take a user's natural-language query, interpret its intent, retrieve the most relevant data from an index, and pass that data to the LLM. This feature is critical for models to generate context-based responses.

- Data Connectors: Data connectors ingest structured and unstructured data from sources such as cloud storage, PDFs, and APIs and convert it into a format LlamaIndex can index, building a knowledge base the LLM can draw on.

3. Conclusion

This list highlights some of the most popular and widely used Python machine learning libraries; many more options are available. Each library has its pros and cons and supports a different scope of functions for solving specific problems. To pick an appropriate Python machine learning library, you need clarity about your business objectives and project requirements.

Beyond selecting libraries, it is also necessary to understand the underlying algorithms and the principles that they operate on. That understanding improves execution and helps produce high-quality outcomes.

FAQs

What is better, PyTorch or TensorFlow?

For standard tasks, PyTorch and TensorFlow achieve similar accuracy. The main differences lie in performance and workflow: in some comparisons, TensorFlow takes longer to train but uses less memory. PyTorch, meanwhile, allows quick prototyping and is well suited to neural networks that need custom features.
